URL parameters, also known as “query strings” or “URL query parameters”, are a means of tracking information and traffic on a website. They are dynamic data appended to a URL to filter or organize the content a page displays, or to pass along additional information about the visit.

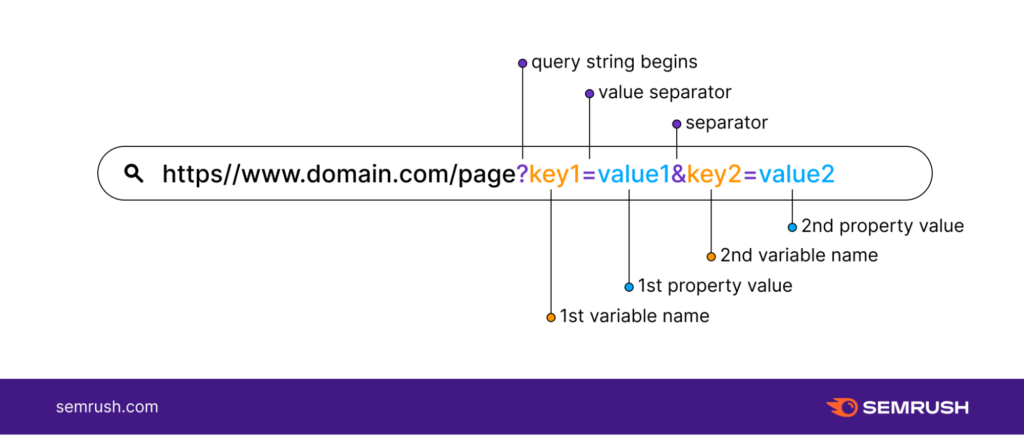

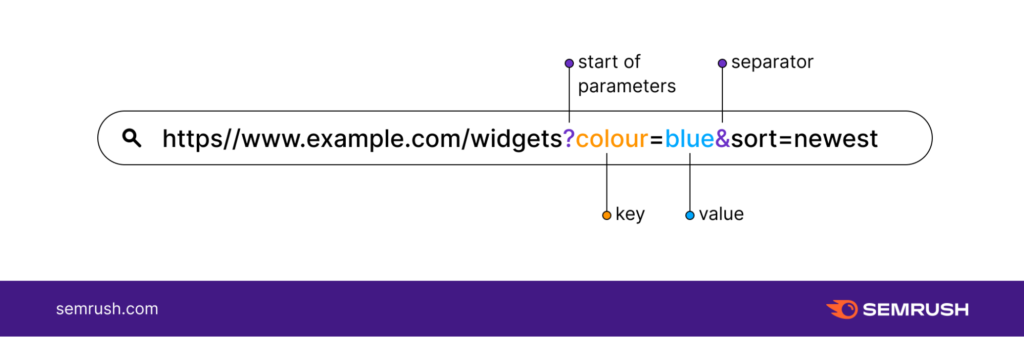

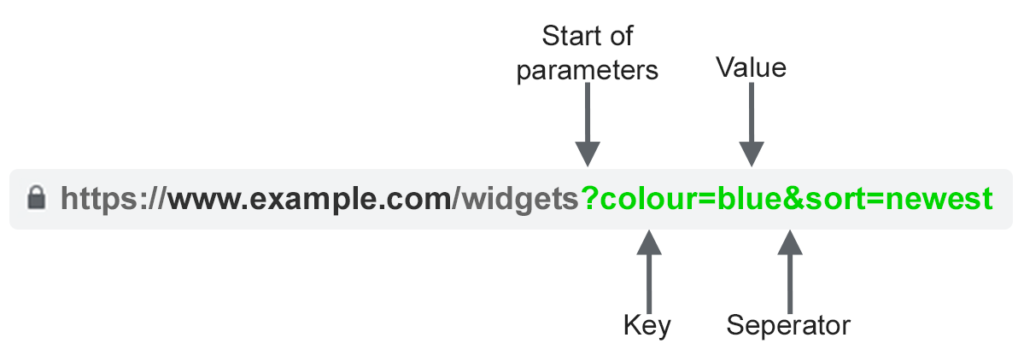

URL parameters appear in the URL after the question mark (?). Each one consists of a key and a value separated by an equals sign (=). Several parameters can be chained in the same URL by separating them with an ampersand (&).
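As a quick illustration, Python's standard `urllib.parse` module can split a query string into its key-value pairs. The URL below is a made-up example; `parse_qsl` returns the pairs in the order they appear.

```python
from urllib.parse import urlsplit, parse_qsl

# Hypothetical example URL with three parameters chained by "&"
url = "https://www.example.com/products?color=blue&sort=price_ascending&page=2"

query = urlsplit(url).query   # everything after "?": "color=blue&sort=price_ascending&page=2"
params = parse_qsl(query)     # split on "&", then on "=", into (key, value) pairs

for key, value in params:
    print(f"{key} = {value}")
# color = blue
# sort = price_ascending
# page = 2
```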

Query strings are an integral part of many website URLs and a great asset for SEO professionals.

However, they can also cause many problems for websites, especially when it comes to ranking pages in Google’s SERPs.

- How can you solve the problems caused by parameters?

- Is it worth removing URL parameters altogether?

In this article, I will explain everything about query strings, from the definition to the different techniques for solving the problems URL parameters cause.

Chapter 1: What is a URL parameter?

URL parameters are dynamic elements of a query string. A query string is the part of the URL that follows a question mark symbol (?).

Example: www.dynamicurl.com/query/thread.php?threadid=64&sort=date

In this string, the elements that come after the question mark (threadid=64&sort=date) are the “URL parameters”.

1.1. What are URL parameters made of?

At first sight, a URL string may look very complicated. The mix of letters, numbers and symbols can easily give the impression of a code to decipher.

In reality, once you understand the anatomy of a URL parameter, you can make sense of every element in a URL string. Here are the elements you may find:

- The question mark (?): marks the beginning of the query string, and therefore of the URL parameters. Example: in www.dynamicurl.com/query/thread.php?threadid=64&sort=date, the parameters are ?threadid=64&sort=date;

- The ampersand (&): used when a URL contains several parameters. Each ampersand marks the end of one parameter and the beginning of the next. Example: in ?threadid=64&sort=date, the & separates threadid=64 from sort=date;

Source: SemRush

- The variable name or “key”: the title or label of the parameter. Example: in ?threadid=64&sort=date, the keys are threadid and sort;

- The value: the specific data attached to a key, separated from it by an equals sign (=). Example: in ?threadid=64&sort=date, the values are 64 and date.

Source: SemRush
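Going the other way, the same anatomy can be assembled programmatically. This sketch uses `urlencode` to join keys and values with `=` and `&`, and `urlunsplit` to place the result after the `?`. The `https` scheme is an assumption, since the example URL above omits it.

```python
from urllib.parse import urlencode, urlunsplit

# Keys and values from the thread.php example above
params = {"threadid": 64, "sort": "date"}

query = urlencode(params)  # "threadid=64&sort=date"
# (scheme, host, path, query, fragment); "https" is assumed
url = urlunsplit(("https", "www.dynamicurl.com", "/query/thread.php", query, ""))
print(url)  # https://www.dynamicurl.com/query/thread.php?threadid=64&sort=date
```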

URL parameters are normally used for two main tasks: modifying the content of a page and tracking click information through the URL. These two uses define the two main types of URL parameters: active and passive.

- Active URL parameters, also called content-modifying parameters: this type of parameter changes the content displayed on a website’s pages.

Example

- Reordering content: ?sort=price_ascending displays the contents of a page in a particular order;

- Segmenting content: ?page=1, ?page=2, ?page=3 split a large body of content into three smaller pages;

- Filtering content: ?type=yellow displays only “yellow” items on a page.

To point to a specific piece of content (product A, for example), you add its identifier to the URL: http://domain.com?productid=A

- Passive URL parameters: unlike active parameters, passive ones do not modify the content of a page; they are used to track clicks through the URL. They record information about each click, such as its origin or campaign, which you can then use to evaluate the performance of your marketing campaigns. Here are some examples of passive URL parameters.

Example

- Passive URL parameters can identify visits or sessions on a site. To track traffic from an email campaign, you can add: https://www.domain.com/?utm_source=emailing&utm_medium=email.

- URL parameters can identify the origin of a visit and its related data (source, medium and even campaign). To collect campaign data: https://www.domain.com/?utm_source=twitter&utm_medium=tweet&utm_campaign=summer-sale.

- Finally, URL parameters can also act as affiliate identifiers. This feature is often used by bloggers and influencers to generate revenue per click.
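To see how passive parameters carry tracking data, here is a small sketch that pulls the `utm_*` values out of the campaign URL above. The helper name `campaign_data` is hypothetical; analytics tools such as Google Analytics do this parsing for you.

```python
from urllib.parse import urlsplit, parse_qs

def campaign_data(url):
    """Return the utm_* tracking values found in a URL's query string (hypothetical helper)."""
    params = parse_qs(urlsplit(url).query)  # {key: [value, ...]}
    return {key: values[0] for key, values in params.items() if key.startswith("utm_")}

data = campaign_data(
    "https://www.domain.com/?utm_source=twitter&utm_medium=tweet&utm_campaign=summer-sale"
)
print(data)  # {'utm_source': 'twitter', 'utm_medium': 'tweet', 'utm_campaign': 'summer-sale'}
```

Note that none of these values change what the page shows; they only describe where the click came from.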

1.2. How are URL parameters applied?

On online stores, for example, URL query parameters are commonly used to make navigation easier for users. In this case, parameters sort products and select pages from specific categories.

This takes users directly to products that may interest them, and lets them filter the results and choose how many items to display per page.

Passive URL parameters, on the other hand, are widely used by digital marketers. They let marketers track where website traffic comes from in order to measure the reach and success of their latest digital marketing investments.

Source: SemRush

Here are some of the most common URL parameter use cases:

- Content filtering: choosing which items to display based on product color or price on an online store, for example: ?type=widget, ?color=blue or ?price-range=20-50;

- Traffic tracking: ?utm_medium=social, ?sessionid=123 or ?affiliateid=abc;

- Translation: ?lang=en or ?language=de;

- Reordering the contents of a page: ?sort=lowest-price, ?order=highest-rated or ?so=newest;

- Identifying a product or category: ?product=small-blue-widget, ?categoryid=124 or ?itemid=24AU;

- Segmenting the content of a page: ?page=2, ?p=2 or ?viewItems=10-30;

- Performing a specific search: ?query=users-query, ?q=users-query or ?search=drop-down-option.

Chapter 2: The various SEO problems caused by URL parameters

Useful as they are, URL parameters should be used sparingly by anyone working on SEO, because they tend to create problems that can hurt a website’s SEO.

Many of these problems stem from excessive consumption of crawl budget, which can keep crawlers from reaching and indexing your pages. A poorly structured passive URL parameter, for example, can also create duplicate content.

This chapter covers the main problems URL parameters can cause for your site’s rankings.

2.1. Duplicate content creation

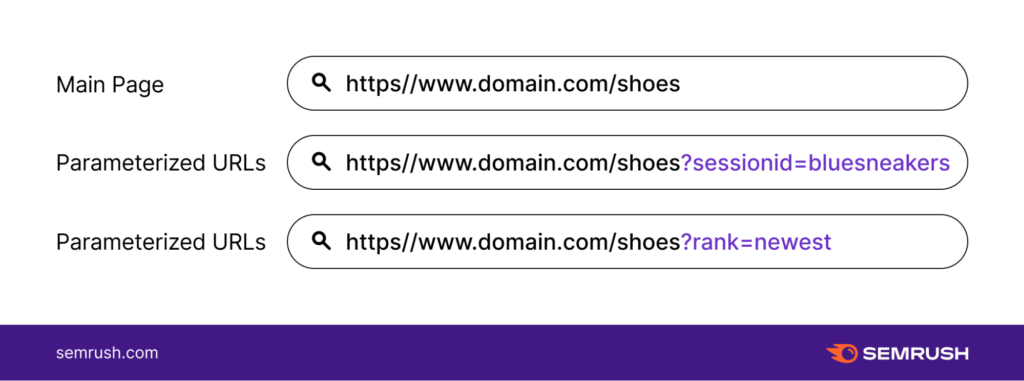

Google and other search engines treat each distinct URL as a separate page. As a result, multiple versions of the same page created with URL parameters are considered independent pages, which produces duplicate content.

Using active parameters to reorder a page does not change it much; some reordering parameters even serve exactly the same page as the original.

Similarly, a URL with tracking tags or a session ID serves the same content as the original URL.

Example

- Static URL: https://www.example.com/widgets

- Tracking parameter: https://www.example.com/widgets?sessionID=32764

- Reorganization parameter: https://www.example.com/widgets?sort=newest

- Identification parameter: https://www.example.com?category=widgets

- Search parameter: https://www.example.com/products?search=widget

All of these URLs return essentially the same content. If you run an online store and this happens for every category, the URLs add up quickly into a huge number.
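One way to see the duplication is to strip the parameters that do not change content and compare what is left. The sketch below assumes a hypothetical set of such keys (`NON_CONTENT_KEYS`); on a real site you would build that list from your own parameter audit.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Hypothetical: keys that do not change page content on this site (compared case-insensitively)
NON_CONTENT_KEYS = {"sessionid", "sort", "utm_source", "utm_medium", "utm_campaign"}

def normalize(url):
    """Drop non-content parameters (and any fragment) so duplicate variants collapse to one URL."""
    parts = urlsplit(url)
    kept = [(k, v) for k, v in parse_qsl(parts.query) if k.lower() not in NON_CONTENT_KEYS]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))

variants = [
    "https://www.example.com/widgets",
    "https://www.example.com/widgets?sessionID=32764",
    "https://www.example.com/widgets?sort=newest",
]
print({normalize(u) for u in variants})  # {'https://www.example.com/widgets'}
```

Three URLs reduce to a single page, which is exactly the duplication a crawler runs into.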

The problem arises when Google’s crawlers visit your site. Crawlers treat every URL they find as a new page, so each parameterized URL is handled as an independent page.

While crawling, they therefore find duplicate content on the same topic spread across several URLs, each treated as a different page. Google usually filters out such duplicates rather than penalizing the site, but it still has to pick which version to show.

And even if this duplicate content is not enough for Google to remove your site from the SERPs, it can still cause keyword cannibalization.

2.2. Wasting crawl budget

A simple URL structure is an asset for optimizing your site. A suitable sitemap lets crawlers browse your site’s pages easily without consuming much bandwidth.

Complex URLs containing many parameters, on the other hand, create many URLs pointing to the same content. This makes your site’s structure more complex and wastes crawlers’ time.

Crawling a site with a very complex structure can exhaust the crawl budget and reduce crawlers’ ability to index the pages you want ranked in the SERPs.
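A rough calculation shows how quickly this adds up. Assume a single category page with three optional parameters: 5 colors, 4 sort orders and 10 extra pages of pagination (all hypothetical numbers). Counting the case where each parameter is absent, that gives (5 + 1) × (4 + 1) × (10 + 1) = 330 distinct URLs for one category, before parameter order is even considered. The sketch generates them to confirm the count.

```python
from itertools import product
from urllib.parse import urlencode

# Hypothetical facet values; None means "parameter not present in the URL"
colors = [None, "blue", "red", "green", "yellow", "black"]                # 5 + absent
sorts = [None, "newest", "price_ascending", "price_descending", "rating"]  # 4 + absent
pages = [None] + [str(n) for n in range(2, 12)]                           # pages 2-11 + absent

urls = set()
for color, sort, page in product(colors, sorts, pages):
    pairs = [("color", color), ("sort", sort), ("page", page)]
    query = urlencode([(k, v) for k, v in pairs if v is not None])
    urls.add("https://www.example.com/widgets" + ("?" + query if query else ""))

print(len(urls))  # 330
```

Multiply that by every category on an online store and the crawl budget problem becomes clear.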

2.3. URL parameters lead to keyword cannibalization

Since the parameterized versions of a URL target the same content, and therefore the same keywords, indexing them puts several pages in competition for the same keywords.

This can lead search engines to devalue these pages, judging that none of them brings any added value to users.

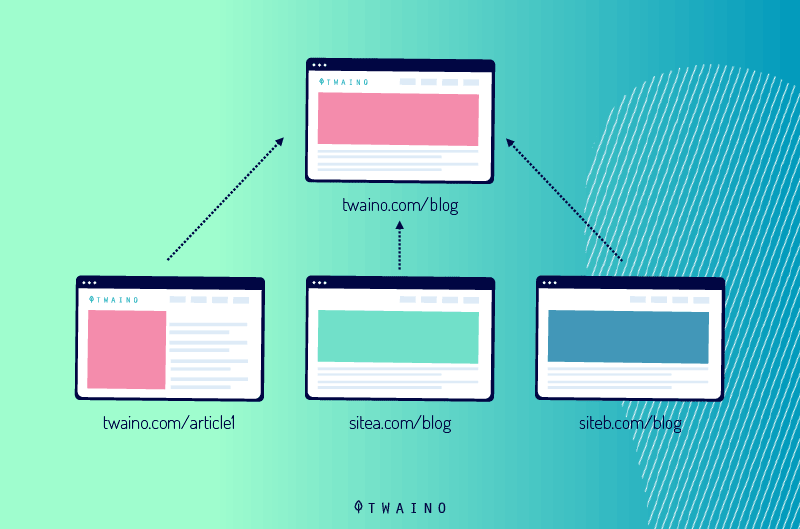

2.4. URL parameters dilute ranking signals

If several parameterized URLs point to the same content, links and social media shares will be spread across whichever versions people happen to use.

Not only can this cost you traffic, but your pages will also rank less well.

Spreading visits and links across versions dilutes your ranking signals: crawlers become uncertain and have a harder time knowing which of the competing pages should appear in the search results.

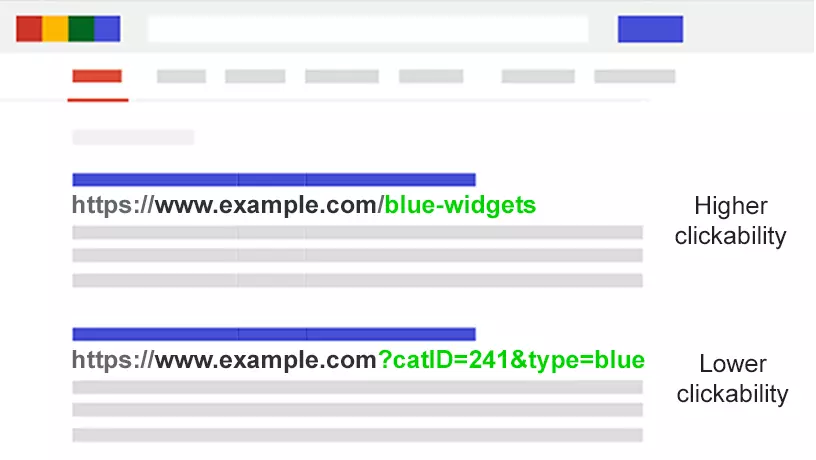

2.5. URL parameters make URLs less attractive

For the best optimization of your website, your URLs should be aesthetically pleasing, readable and simple to understand. A URL with parameters is usually complex and unreadable for visitors.

A parameterized URL looks spammy and does not inspire trust, so users are less likely to click on it or share it.

URL parameters can therefore hurt the performance of your web pages. For one thing, click-through rate (CTR) may play a part in rankings.

Source: Search Engine Journal

But complex URLs lower that click-through rate, so your pages lose this advantage. Traffic from social networks, emails and similar channels may also drop, because users are wary of parameterized URLs.

And the role of tweets, shares, emails and the like in building a page’s reputation is far from negligible.

In short, poorly readable URLs can be an obstacle to your brand’s engagement with customers and internet users in general.

Chapter 3: How do you manage URL parameters so that they don’t hurt your site’s SEO?

Most of the SEO problems caused by URL parameters that I described in the previous chapter have one thing in common: the crawling and indexing of parameterized URLs. That does not mean you have to stop generating parameterized URLs on your site.

Here is how to solve URL parameter issues and keep creating URLs with parameters without causing SEO trouble for your pages.

3.1. Determine the extent of URL parameter problems

Before you start solving URL parameter problems on a website, you need to know every parameter used in the site’s URLs.

Unless your developer keeps a complete list of them, counting all the parameters in your site’s URLs can be a real headache.

Fortunately, there is no need to panic.

I will show you five ways to find all the URL parameters on your site and see how crawlers handle them.

- Use a crawler: run a crawler such as Screaming Frog to list all the URLs on your site, then filter for URLs containing a question mark (?) to find every parameter in use.

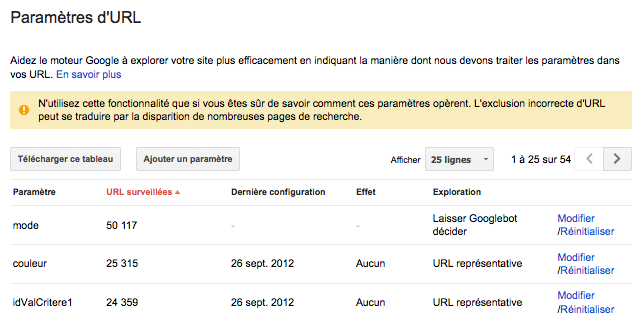

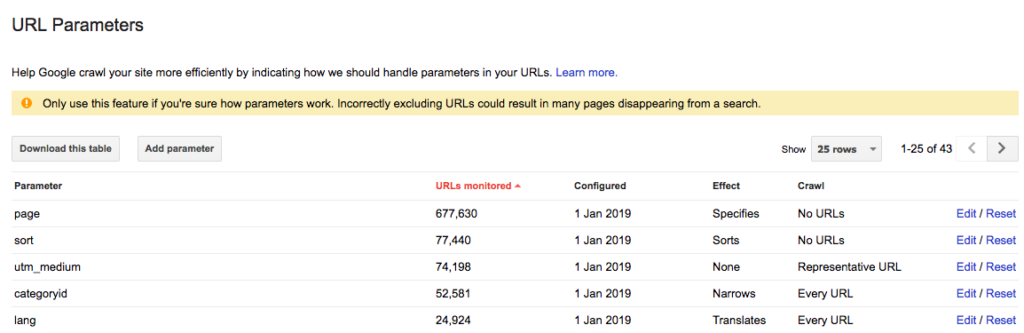

- Check the URL Parameters tool in Google Search Console: Google automatically listed the query strings it detected there, although it retired this tool in 2022.

Source: WebRankInfo

- Analyze log files: check your server log files to see whether Google’s crawlers have visited URLs with parameters.

- Combine the site: and inurl: operators: a query such as site:example.com inurl:key shows how Google indexes the parameters you found on your site.

- Search the Google Analytics report for URL parameters: search for the question mark (?) in Google Analytics to see how users interact with parameterized URLs. Before that, check that URL query parameters are not excluded in the view settings.
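For the log-file step, a short script can count which parameter keys Googlebot actually requests. This sketch assumes the common “combined” access-log format used by default in Apache and Nginx; adjust the regular expression to your own format. It filters on the user-agent string alone, which can be spoofed, so a real audit should also verify Googlebot (for example with a reverse DNS lookup). The sample lines are invented.

```python
import re
from collections import Counter
from urllib.parse import urlsplit, parse_qsl

# Assumes the "combined" log format: ... "GET /path?query HTTP/1.1" status size "referer" "user-agent"
LINE_RE = re.compile(r'"(?:GET|HEAD) (?P<path>\S+) HTTP/[\d.]+" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"')

def googlebot_param_keys(lines):
    """Count query-parameter keys in requests whose user agent claims to be Googlebot."""
    counts = Counter()
    for line in lines:
        m = LINE_RE.search(line)
        if not m or "Googlebot" not in m.group("agent"):
            continue
        query = urlsplit(m.group("path")).query
        counts.update(key for key, _ in parse_qsl(query, keep_blank_values=True))
    return counts

sample = [
    '66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /widgets?sort=newest HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/Oct/2023:13:56:02 +0000] "GET /widgets?sort=price&page=2 HTTP/1.1" 200 4980 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Oct/2023:13:57:10 +0000] "GET /widgets?sessionid=123 HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]
print(googlebot_param_keys(sample))  # Counter({'sort': 2, 'page': 1})
```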

With this data, you can decide how to manage each URL parameter.

3.2. Constantly check your crawl budget and limit URL parameters

The number of pages Googlebot or other search engine crawlers will crawl on your site within a given period is called the crawl budget. It varies from one site to another, partly because sites differ in how many pages they have.

It is therefore important to make sure your crawl budget is not wasted. As mentioned in the previous chapter, having many parameterized URLs on your site depletes it quickly.

To manage this problem, quickly assess how useful each parameter is for your site’s SEO. This lets you sort the parameters and eliminate the least useful ones.

In most cases, you will find reasons to reduce the number of parameterized URLs and thus save crawl budget. The following process helps you reduce the parameterized URLs on your site and minimize their impact on SEO.

3.2.1. Sort and eliminate unnecessary parameters

Start by asking your developer for a complete list of all the URL parameters generated on the site, or use one of the methods in section 3.1 to obtain a list of all the parameterized URLs on your site.

In this list, you will most likely find parameters that serve no useful purpose.

You can also define evaluation criteria to decide which URL parameters are valuable and which are not.

For example, you may have used a sessionID parameter to identify a group of users on your site at a given time.

That parameter can remain in the URL string even after you stop using it. Since cookies are generally better at identifying users, the sessionID parameter is no longer needed in the URL and can be removed.

Similarly, you may find that one of your navigation filters is never used by visitors. All such unnecessary query parameters should be removed.

3.2.2. Limit values without specific functions

A parameter is only useful if it performs a specific function, so avoid adding a parameter whose value is empty.

3.2.3. Inserting unique keys

Avoid using the same key (variable name) several times with different values. For multiple-selection options, group the values together under a single key instead.
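Rules 3.2.2 and 3.2.3 can be enforced wherever your site builds its URLs. The hypothetical `clean_params` helper below drops parameters with empty values and groups repeated keys under a single key with comma-separated values; the comma separator is an assumption, so use whatever format your application already parses.

```python
from urllib.parse import parse_qsl, urlencode

def clean_params(query):
    """Drop empty values and merge repeated keys into one comma-separated value (hypothetical helper)."""
    merged = {}
    for key, value in parse_qsl(query, keep_blank_values=True):
        if value == "":
            continue  # 3.2.2: a parameter with no value has no function
        merged.setdefault(key, []).append(value)  # 3.2.3: collect values under one key
    # safe="," keeps the separator readable instead of encoding it as %2C
    return urlencode({key: ",".join(values) for key, values in merged.items()}, safe=",")

print(clean_params("color=blue&size=&color=red"))  # color=blue,red
```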

3.2.4. Organizing URL parameters

Reordering URL parameters does not change the page, so it does nothing to prevent duplicate content: crawlers still see the different orderings as identical pages.

However, each combination of parameters is a separate URL that uses up crawl budget, which can prevent Googlebot from crawling your whole site and ranking the pages that deserve it.

You can counter this by asking your developer to use a consistent order when writing the scripts that generate URL parameters. There is no mandatory order, but a logical one is to start with translation parameters, then identification parameters, then pagination parameters, then filtering parameters, and finally tracking parameters.

Source: Affde

This approach offers several SEO advantages, including:

- More efficient use of crawl budget;

- Fewer duplicate content problems;

- Ranking signals consolidated on fewer pages;

- Clearer, more readable URLs, which can encourage users to click and share them via social networks, emails, etc.
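A consistent order is easiest to guarantee with a single helper that every part of the site uses to build URLs. This sketch follows the order suggested above (translation, identification, pagination, filtering, then tracking); the key names in `PARAM_ORDER` are hypothetical placeholders for your own parameters, and any unknown keys are placed last in alphabetical order.

```python
from urllib.parse import parse_qsl, urlencode

# Hypothetical key names, listed in the suggested order:
# translation, identification, pagination, filtering/sorting, tracking
PARAM_ORDER = ["lang", "category", "product", "page", "color", "sort", "utm_source", "utm_medium"]
RANK = {key: i for i, key in enumerate(PARAM_ORDER)}

def ordered_query(query):
    """Rewrite a query string so its parameters always appear in the same order."""
    pairs = parse_qsl(query)
    # Known keys follow PARAM_ORDER; unknown keys go last, alphabetically
    pairs.sort(key=lambda kv: (RANK.get(kv[0], len(PARAM_ORDER)), kv[0]))
    return urlencode(pairs)

print(ordered_query("utm_source=news&color=blue&page=2&lang=en"))
# lang=en&page=2&color=blue&utm_source=news
```

Whatever order visitors or scripts produce, the site then emits one URL per combination instead of one per permutation.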

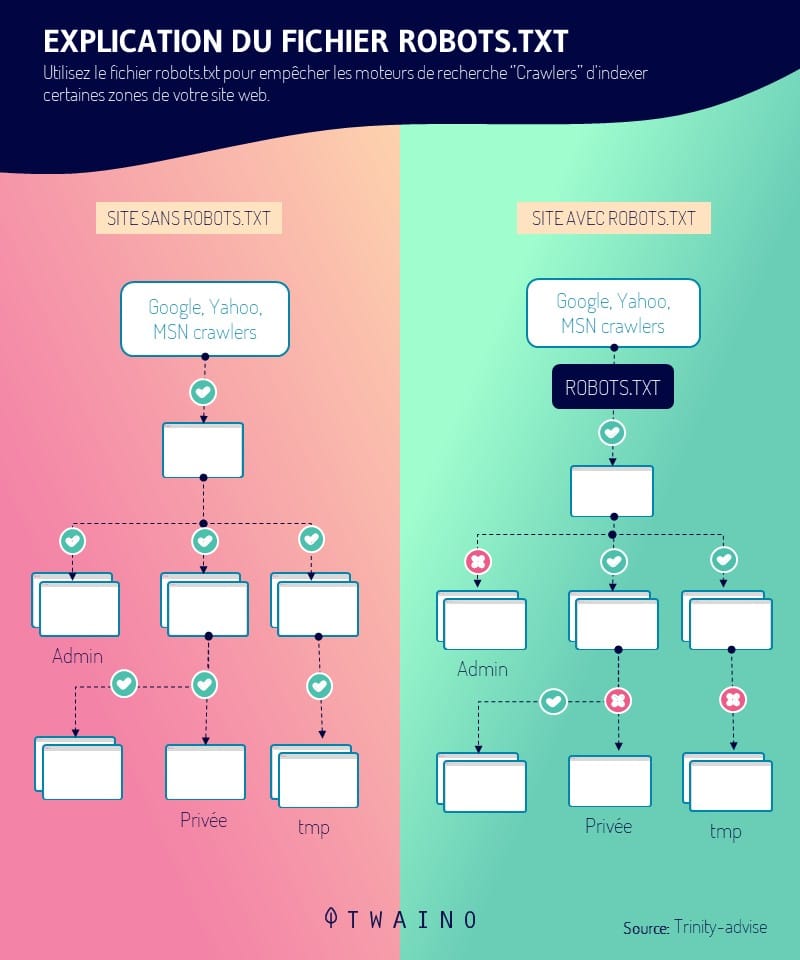

3.3. Blocking crawler access to certain pages

The URL parameters you create to sort and filter pages can keep generating URLs with duplicate content.

When you find that your website has a lot of URL parameters, you may decide to block crawlers from certain sections and indicate which pages should not be crawled.

A Disallow rule prevents Googlebot from accessing the sections of your site that contain duplicate content. This technique gives you control over what crawlers do on your site.

To do this, you add rules to your robots.txt file telling search engines which pages they may crawl and which they may not. But first, make sure there is a consistent link pointing to the static, unparameterized version of each page.

Since crawlers check the robots.txt file before they start crawling a website, this is a good way to focus their attention on your most relevant URLs. A rule such as Disallow: /*?tag=* blocks URLs containing the tag parameter, while Disallow: /*?* blocks every URL that contains a question mark (?).

If you use the broad /*?* rule, make sure none of the URLs you want crawled contain parameters, since the rule blocks every URL with this symbol. Using the robots.txt file to manage crawler paths offers benefits such as:

- Efficient and effective use of the crawl budget;

- Easy and simple technical implementation;

- Limiting the problems of duplicate content;

- Applicable to any type of URL parameters.
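To check which URLs a wildcard rule will catch before you deploy it, you can approximate Google’s pattern matching in a few lines. Python’s built-in `urllib.robotparser` does not implement the `*` and `$` wildcards inside paths, so this sketch translates them into regular expressions itself. It only handles Disallow patterns, not Allow rules or Google’s longest-match precedence, so treat it as a rough test rather than a full robots.txt parser.

```python
import re

def pattern_to_regex(pattern):
    """Translate a robots.txt path pattern into a regex: '*' = any characters, trailing '$' = end of URL."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = "".join(".*" if ch == "*" else re.escape(ch) for ch in pattern)
    return re.compile(body + ("$" if anchored else ""))

def is_blocked(path, disallow_patterns):
    """True if any Disallow pattern matches from the start of the path (query string included)."""
    return any(pattern_to_regex(p).match(path) for p in disallow_patterns)

for path in ["/widgets", "/widgets?sort=newest", "/widgets?tag=blue"]:
    print(path, is_blocked(path, ["/*?tag=*"]), is_blocked(path, ["/*?*"]))
# /widgets False False
# /widgets?sort=newest False True
# /widgets?tag=blue True True
```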

3.4. Insert canonical tags on parameterized URLs

After sorting out your URL parameters and deciding which static page should be optimized and ranked in the SERPs, you can canonicalize the parameterized URLs that display duplicate content.

A canonical tag (a link element with rel="canonical") tells crawlers that a page has content identical or similar to another page, and which URL is the preferred version.

These signals help crawlers save crawl budget and consolidate indexing on the URLs marked as canonical. On the relevant URLs you want indexed, the rel="canonical" tag also helps you organize them and track their SEO.

However, this technique only works properly when the content of the parameterized page closely resembles the canonical page. Canonicalizing your URLs offers benefits such as:

- Consolidation of ranking signals on static or canonical page URLs;

- Protection of your site from duplicate content issues.
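If your pages are generated by a script, the canonical tag can be computed from the requested URL. This sketch keeps only a hypothetical allowlist of content-defining parameters (`CONTENT_KEYS`) and points the page at the resulting URL; the `<link rel="canonical">` element it outputs belongs in the page’s `<head>`.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Hypothetical: parameters that genuinely define a distinct page on this site, in canonical order
CONTENT_KEYS = ["category", "page"]

def canonical_link(url):
    """Build a rel=canonical link that keeps only content-defining parameters."""
    parts = urlsplit(url)
    params = dict(parse_qsl(parts.query))
    kept = [(k, params[k]) for k in CONTENT_KEYS if k in params]
    canonical = urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))
    return f'<link rel="canonical" href="{escape(canonical)}">'

print(canonical_link("https://www.example.com/widgets?sort=newest&sessionID=32764"))
# <link rel="canonical" href="https://www.example.com/widgets">
```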

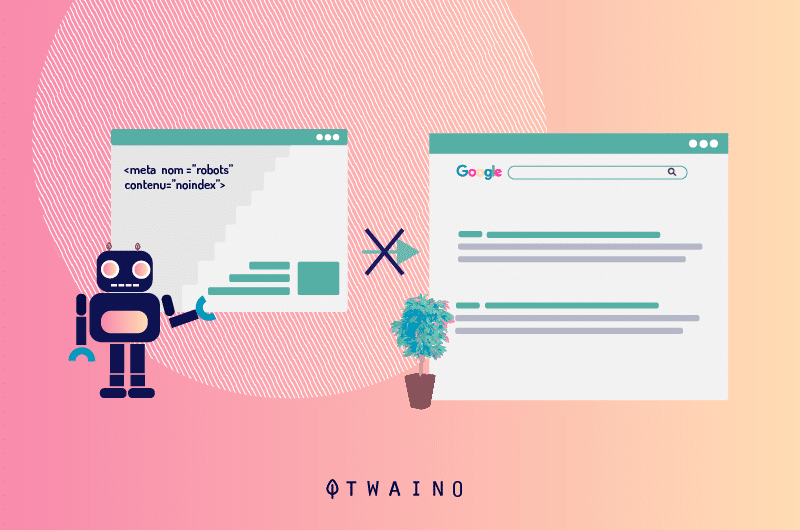

3.5. Use a Noindex Meta Robots tag

If you add a noindex directive to parameterized pages that duplicate content or add no SEO value, search engines will not index these pages.

However, keeping a noindex tag on a page for a long time can also lead search engines to eventually stop following the links on it.
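A minimal sketch of applying noindex selectively: the helper below returns a `<meta name="robots" content="noindex">` tag for any URL carrying a parameter outside a hypothetical allowlist (`INDEXABLE_KEYS`), and nothing otherwise. Keep in mind that crawlers can only see this tag on pages they are allowed to crawl, so it should not be combined with a robots.txt Disallow rule for the same URLs.

```python
from urllib.parse import urlsplit, parse_qsl

# Hypothetical: the only parameters whose pages should stay indexable
INDEXABLE_KEYS = {"lang", "category", "page"}

def robots_meta(url):
    """Return a noindex meta tag if the URL has any non-indexable parameter, else None."""
    keys = {key for key, _ in parse_qsl(urlsplit(url).query, keep_blank_values=True)}
    if keys - INDEXABLE_KEYS:
        return '<meta name="robots" content="noindex">'
    return None

print(robots_meta("https://www.example.com/widgets?sort=newest"))  # <meta name="robots" content="noindex">
print(robots_meta("https://www.example.com/widgets?page=2"))       # None
```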

3.6. Manage URL parameters in Google Search Console

When URL parameters threaten your site’s SEO, Google may warn you in Search Console that they could cause many of your pages to be downgraded in search results. Note that Google retired Search Console’s URL Parameters tool in 2022, so the settings below describe how that tool worked while it was available.

The most worrying cases are the pages with duplicate content that parameters create.

Where this configuration is available, learning to master it lets you protect your site’s integrity and authority instead of letting Googlebot “decide”.

The most important thing is to know how the parameters affect the content of your web pages.

- Recommendation 1: Configure tracking (passive) parameters as “Representative URL”. Because these parameters do not change page content, Googlebot only needs to crawl one representative version.

- Recommendation 2: Configure active parameters that reorder page content as “Sorts”. If visitors add the parameter themselves, set crawling to “No URLs”.

If the sort parameter is applied by default, set it to “Only URLs with value” and enter the default value.

- Recommendation 3: Configure parameters that filter a page down to relevant content as “Narrows”. Set crawling to “No URLs” for filters that are irrelevant or have no SEO benefit, and to “Every URL” for those that serve an SEO purpose.

- Recommendation 4: Configure parameters that display a specific item or group of content as “Specifies” and set them to “Every URL”. Ideally, though, such content should have a static URL.

- Recommendation 5: Configure parameters that display a translated version of your content as “Translates”. Ideally, translations should live in subfolders; if they don’t, set these parameters to “Every URL”.

- Recommendation 6: Configure parameters that display one page of a longer sequence as “Paginates”. If your XML sitemap already gets your pages indexed efficiently, you can set crawling to “No URLs” to save crawl budget. Otherwise, set it to “Every URL” so crawlers can reach all the pages.

Google also adds some parameters automatically, with the default value “Let Googlebot decide”. Unfortunately, these cannot be removed.

Source: Affde

It is therefore better to configure URL parameters proactively yourself. You can delete the parameters you configured in Google Search Console at any time if they no longer serve a purpose.

NB: Parameters you set to “No URLs” in Google should also be added to the “Ignore URL Parameters” tool in Bing Webmaster Tools.

3.7. Convert dynamic URLs to static URLs

Given the problems URL parameters can cause, many people see removing all parameters as the solution. That is indeed an option, and subfolders have one clear advantage: they help Google.

They help the search engine understand the structure of the site. In addition, static URLs containing relevant keywords contribute to the website’s SEO. To take advantage of this, you can use server-side URL rewriting to turn parameters into subfolder URLs.

Example:

Parameterized URL: www.example.com/view-product?id=482794

URL after rewriting: www.example.com/widgets/blue
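On the server, this rewriting typically involves two halves: old parameterized URLs are permanently (301) redirected to their static equivalents, and incoming static paths are mapped back to the parameters the application expects. The sketch below shows both in plain Python; the `PRODUCT_PATHS` table, the `/category/color` path pattern and the `https` scheme are all assumptions. In practice this is often done with web server rewrite rules (such as Apache’s mod_rewrite) rather than application code.

```python
import re
from urllib.parse import urlsplit, parse_qsl

# Hypothetical lookup table: product IDs mapped to keyword-rich static paths
PRODUCT_PATHS = {"482794": "/widgets/blue"}

def static_path_for(url):
    """Return the static path an old parameterized URL should 301-redirect to, or None."""
    params = dict(parse_qsl(urlsplit(url).query))
    return PRODUCT_PATHS.get(params.get("id"))

# Hypothetical static URL pattern: /<category>/<color>
STATIC_RE = re.compile(r"^/(?P<category>[\w-]+)/(?P<color>[\w-]+)$")

def internal_params(path):
    """Map an incoming static path back to the parameters the application understands."""
    m = STATIC_RE.match(path)
    return m.groupdict() if m else None

print(static_path_for("https://www.example.com/view-product?id=482794"))  # /widgets/blue
print(internal_params("/widgets/blue"))  # {'category': 'widgets', 'color': 'blue'}
```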

This technique works especially well for parameters containing keywords that users actually search for. It becomes a problem, however, for site search parameters.

Each query a user types would generate a new static page competing with the canonical page for rankings. And when a user looks for something your site does not offer, the new page would present crawlers with low-quality content that may not be relevant to the query.

Similarly, converting dynamic URLs to static ones for things like pagination, site search results or sorting does not fix duplicate content or save crawl budget.

Summary

Basically, URL parameters are a cornerstone of how websites work.

Although they cause SEO problems such as duplicate content and wasted crawl budget, SEO professionals who fully master how they work gain more from them than they lose.

In this article, I first explained what a URL parameter is, then the problems parameters can cause and how they can hurt your site’s rankings.

The solutions to these problems, detailed in the last chapter, will help you add parameters to your site’s URLs with confidence.

The best thing about these methods is that you can keep parameters on your site without risking your rankings in the SERPs.

Did you like this article? Do you have any questions about how query parameters work? Don’t hesitate to ask in the comments!