The crawl budget is the limit on the number of pages that Google sets out to crawl on a website in order to index it. While crawling, the search engine tries not to overload the site’s server, while still taking care not to miss important pages. To find the right balance, Google assigns a different crawl budget to each website based on criteria such as the responsiveness of the server, the size of the site, the quality of its content, and the frequency of updates.

The web keeps expanding, and so does SEO. In 2019 alone, more than 4.4 million blog posts were published online every day.

While Internet users enjoy consulting this content, search engines are faced with a major challenge: how to organize their resources so that they crawl only relevant pages and ignore spammy or poor-quality links?

Hence the notion of crawl budget

- What is it concretely?

- How important is it for a website?

- What are the factors that affect it?

- How to optimize it for a website?

- And how to monitor it?

These are some of the questions we will answer clearly and concisely throughout this mini-guide

Let’s go!

Chapter 1: Crawl budget – Definitions and SEO importance

To properly develop this chapter, we will approach the definition of the crawl budget from two angles

- A definition seen from the side of SEO experts

- And what Google really means by this term

1.1 What is the crawl budget?

The crawl budget (or exploration or indexation budget) is not an official term given by Google

Rather, it originates from the SEO industry where it is used by SEO experts as a generic term to designate the resources allocated by Google to crawl a site and index its pages

In simple terms, the crawl budget of a website refers to the number of pages that Google expects to crawl on the site within a given time frame

It is a predetermined number of URLs that the search engine instructs its indexing robot, Googlebot, to consider when it visits the site

As soon as the limit of the crawl budget is reached, Googlebot stops exploring the site and leaves, and the pages it has not yet crawled are simply ignored

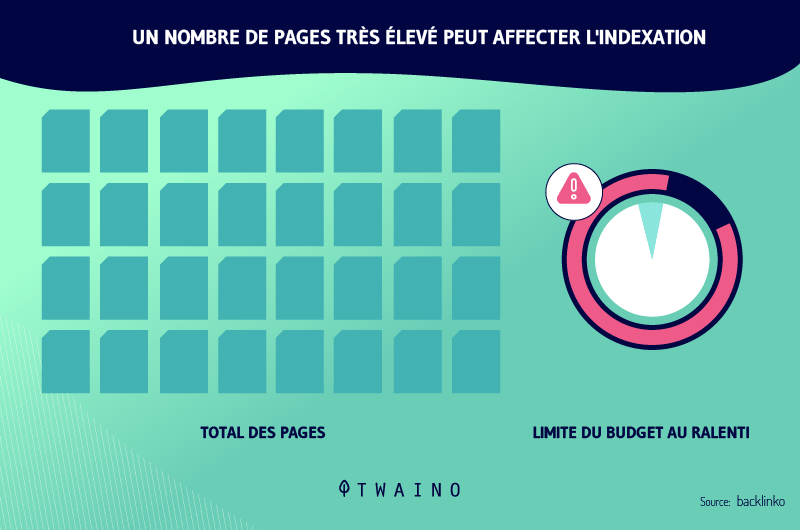

This number of pages to index can vary from one site to another because not all sites have the same performance or the same authority. A fixed crawl budget for all websites could be too high for one site and too low for another

The search engine therefore uses a number of criteria to set the time its indexing robot spends on each site and the number of pages it crawls

There is no exhaustive list of these criteria, as with many things related to Google’s algorithm

Nevertheless, here are some of these criteria known by all

- The performance of the site: a well-optimized website with a fast loading speed will probably get a higher crawl budget than a site that is slow to load

- The size of the site: the more content a site contains, the higher its budget will be

- The frequency of updates: a site that publishes enough quality content and regularly updates its pages will get a considerable crawl budget.

- The links on the site: the more links a site contains, the higher its crawl budget will be and the better its pages will be indexed.

Ideally, the crawl budget should cover all pages of a site, but unfortunately, this is not always the case. That’s why it is important to optimize the above mentioned criteria to maximize your chances of getting a large crawl budget

It must be said that, so far, we have approached the definition of this term from the point of view of a site owner or an SEO expert working on a website

But when the question “what is crawl budget?” was put to Gary Illyes, an analyst at Google, his answer was much more nuanced

1.2 What does Google really mean by crawl budget?

In 2017, Gary Illyes addressed the question by detailing how Google understands crawl budget. In his answer, we can distinguish 3 main parts

- The concept of crawl rate

- The crawl demand

- And some other factors

1.2.1. The crawl rate

While crawling, Google is careful not to overwhelm the sites it visits and bring down their servers. This is not surprising, since the search engine has always advocated a good user experience on websites

The words of Gary Illyes confirm this once again

“Googlebot is designed to be a good citizen of the Web. Crawling is its top priority while making sure it doesn’t degrade the experience of users visiting the site. We call this the ‘crawl rate limit’, which limits the maximum retrieval rate for a given site.”

So if GoogleBot sees signs on a site that its frequent crawling could affect the site’s performance, then the indexing robot slows down its crawl time as well as its visit frequency

As a result, in some cases, some pages of a website can end up not being indexed by GoogleBot

Conversely, if the indexing robot receives encouraging signs such as fast responses from the server, it facilitates the work and GoogleBot may decide to increase the duration and frequency of its visits

1.2.2. The crawl demand

Regarding crawl demand, Gary Illyes explains

“Even if the crawl rate limit isn’t reached, if there’s no demand from indexing, there will be low activity from Googlebot.”

Source: Tech Journey

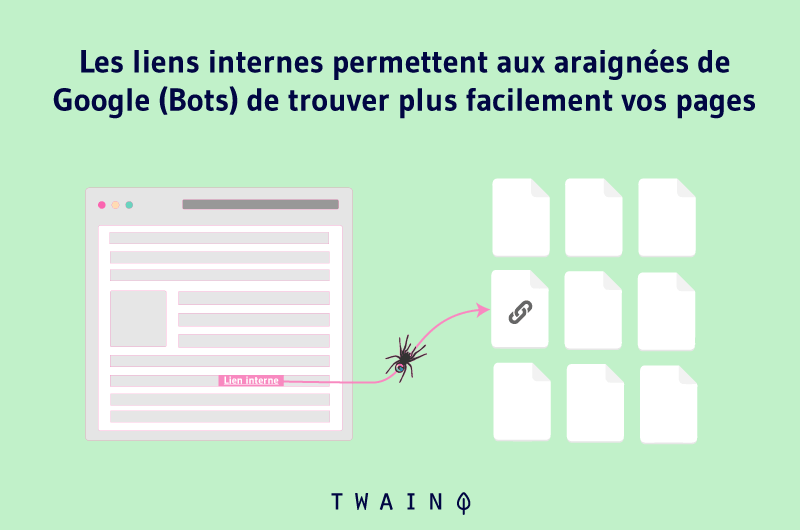

Simply put, GoogleBot relies on the crawl demand of a site to decide if the site is worth revisiting

This demand is influenced by two factors

- URL popularity: the more popular a page is, the more often it tends to be crawled.

- Obsolete URLs: Google also tries not to keep stale URLs in its index, so it recrawls pages to pick up their changes. A site whose URLs are rarely updated gives Googlebot less reason to come back

Google’s intention is to index recent or regularly updated contents of popular sites and to leave out obsolete ones. This is its way of keeping its index always up to date with recent content for its users

1.2.3. The other factors

The other factors include everything that could improve the quality of the contents of a site as well as its structure. This is an important aspect that Gary Illyes wanted to focus on

Moreover, he recommends that webmasters avoid certain practices such as

- Creating poor-quality content;

- Certain implementations of faceted navigation;

- Duplicate content;

- And so on

We will come back to these factors in more detail in a later chapter. According to Gary Illyes, by using such practices on your site, you are in a way wasting your crawl budget

Here are his words

“Wasting server resources on pages like these will drain crawl activity from pages that are actually valuable, which can significantly delay the discovery of quality content on a site.”

To better understand what Gary is getting at, let’s say for example that you have a popular e-commerce store that specializes in selling video game consoles and all the accessories

You decide to create a space on the site where your users can share their experiences with your products, a sort of large and active forum. What do you think will happen?

Well, because of this forum, millions of low-value URLs will be added to your site. Consequence: these URLs will unnecessarily consume a good part of the crawl budget that Google has allocated to you. As a result, pages that really represent value for you may not be indexed.

Now, you have a pretty clear idea of what the crawl budget is, but concretely how important is it for a website?

Chapter 2: The importance of the crawl budget for SEO

An optimal crawl budget is of great importance for a website, since it allows its important pages to be indexed quickly

As Gary Illyes pointed out, a waste of your crawl budget will prevent Google from analyzing your site efficiently. The indexing robot could spend more time on unimportant pages at the expense of the ones you really want to rank for

This would reduce your ranking potential, since a page that is not indexed will not appear in Google search results. Such a page loses any chance of being found by Internet users from a SERP

Imagine producing interesting content with beautiful and illustrative visuals. Then you go even further by optimizing the Title, Alt, Hn tags and many other factors, just like a knowledgeable SEO expert would do

But if in the end, you ignore the optimization of the crawl budget, I am afraid that Google’s indexing bots won’t reach this quality content and neither will the users. Which would be a shame considering all the SEO efforts that have been made

However, it should be noted that optimizing the crawl budget is particularly important only for large websites

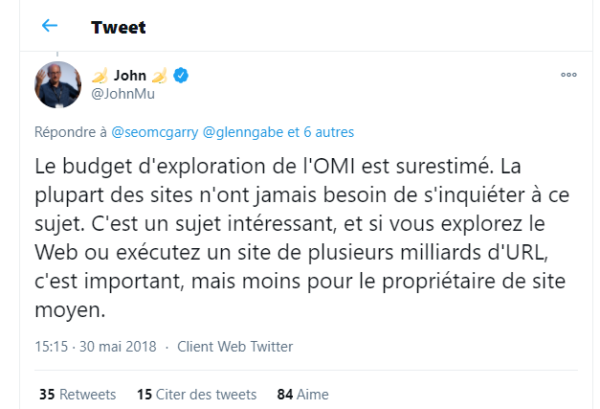

John Mueller, another Google analyst, put it this way in a tweet

“Most sites never need to worry about this. It’s an interesting topic, and if you’re crawling the web or running a multi-billion URL site, it’s important, but less so for the average site owner.”

As you can see, the crawl budget mainly concerns very large websites with billions of URLs, such as the famous online shopping platforms

These sites can really see a drop in traffic if their crawl budget is not well optimized. As for other sites, Google remains less alarmist and reassures that they should not worry too much about problems related to the crawl rate

If you are a small local site or you have just created your website, this is news that makes you breathe a sigh of relief

However, you don’t lose anything by wanting to optimize your crawl budget, especially if you plan to expand your site in the future

In fact, many of the methods used to optimize the crawl rate are also used to optimize the ranking of a site on Google pages

Basically, when a company tries to improve their site’s crawl budget, they are also improving their position on Google

Here’s a list of some SEO benefits that a website gets by optimizing its crawl budget, even if it doesn’t have any problems in this area

- Getting Googlebot to crawl only the important pages of the site

- Having a well-structured sitemap

- Reducing errors and long redirect chains

- Improving site performance

- Keeping the content of the site up to date

- And so on

With all these advantages, there is no doubt that the crawl budget is of great importance for a website

But in order to succeed in its optimization, I want to present some negative factors that you should pay attention to before starting

Chapter 3: Factors that affect the crawl budget

Here are some factors that can negatively affect the crawl budget of a site

3.1. Faceted navigation

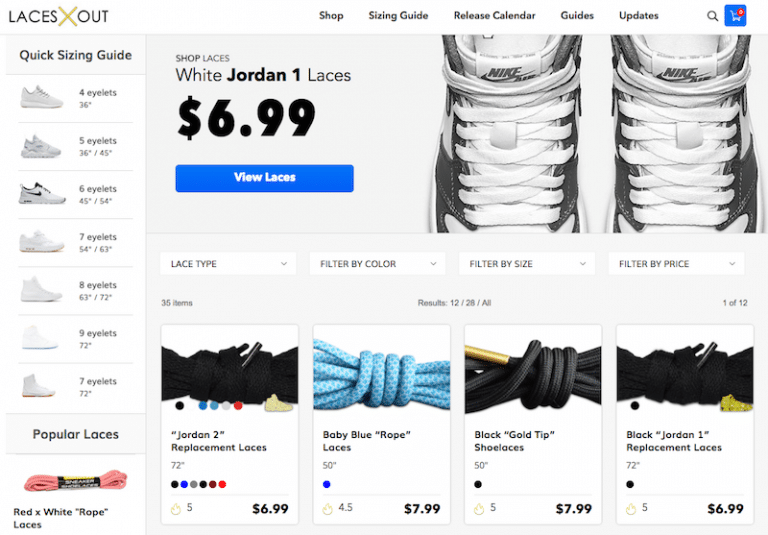

This form of navigation is common on online stores. Faceted navigation lets the user choose a type of product and then narrow down the results with filters on the page

These can be filters for the available colors or sizes of a product, for example. The catch is that each combination of filters generates its own URL

And each of these URLs just displays a subset of the original page, not new content. This is a waste of the site’s crawl budget

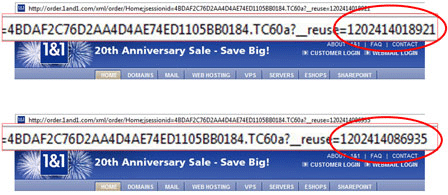

3.2. URLs with session IDs

The technique of putting session identifiers in URLs should not be used anymore, because with each new connection the system automatically generates a new URL. This creates a massive number of URLs for a single page

Source Pole Position Marketing
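To see how session IDs multiply URLs, here is a minimal Python sketch. The parameter names in SESSION_PARAMS and the example.com URLs are assumptions made up for the illustration; your own site may use different names. It simply removes the session parameter and shows that several crawled URLs collapse into one real page.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Hypothetical session parameter names; adjust to what your site actually uses.
SESSION_PARAMS = {"sid", "sessionid", "phpsessid"}

def strip_session_id(url: str) -> str:
    """Return the URL without any session-identifier query parameter."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in SESSION_PARAMS
    ]
    return urlunsplit(parts._replace(query=urlencode(kept)))

# Three URLs a crawler might discover (illustrative data).
urls = [
    "https://example.com/product?id=42&sid=a1b2c3",
    "https://example.com/product?id=42&sid=d4e5f6",
    "https://example.com/product?id=42",
]

# Once the session ID is removed, only one distinct page remains.
unique_pages = {strip_session_id(u) for u in urls}
print(len(urls), "crawled URLs for", len(unique_pages), "real page")
```

Running this kind of check on a list of crawled URLs gives a rough idea of how much crawling goes to session-ID duplicates.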

3.3. Duplicate content

If there is a lot of identical content on a site, Googlebot can crawl all the pages involved without coming across any new content

The resources used by the search engine to analyze all these identical pages could be used to crawl URLs with relevant and different content.

3.4. Soft error pages

Unlike real 404 errors, these “soft 404” pages return a 200 (OK) status code even though the requested content is missing: the server answers with a functional page, but not the one you want. Googlebot therefore keeps crawling them as if they were normal pages

3.5. Hacked pages

Google always makes sure to provide the best results to its users, which of course excludes hacked pages. So if the search engine spots such pages on a site, the indexing is stopped to prevent these pages from ending up in the search results.

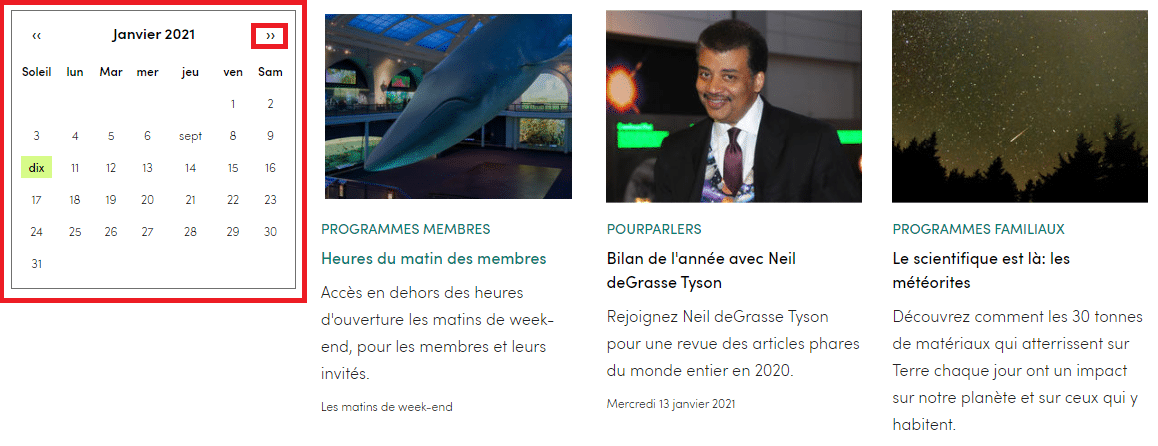

3.6. Infinite spaces

Infinite spaces are areas of a site that can generate an unlimited number of URLs. Let’s take the case of a calendar whose “next month” button always links to a page for the following month

When crawling, Googlebot could fall into a kind of infinite loop and empty the site’s crawl budget by constantly following “next month”

Every time the right arrow is followed, a new URL is created on the site, making for a practically endless number of links from a single page

3.7. Spammy or low quality content

Google tries to have in its index only quality contents that bring a real added value to Internet users

So, if the search engine detects poor quality content on a site, it gives it very little value

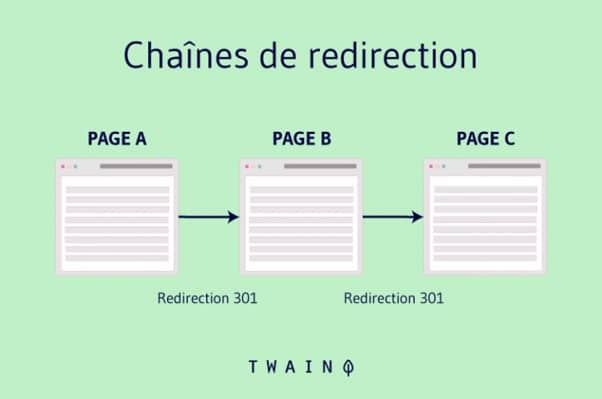

3.8. Redirect chains

When, for one reason or another, you create redirect chains, Googlebot has to go through all the intermediate URLs before reaching the real content.

The robot has to request each intermediate URL separately, so every hop counts as one more fetch, which unnecessarily drains the crawl budget allocated to the site.
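To make the cost of a chain concrete, here is a small Python sketch. It assumes you have already exported your redirects as a simple source-to-target mapping (the paths below are invented for the example); it does not query a real server. It follows a chain, counts the hops, and then "flattens" the map so that every old URL points straight to its final destination.

```python
def redirect_chain(start: str, redirects: dict[str, str], max_hops: int = 10) -> list[str]:
    """Follow redirects from `start` and return every URL visited, in order."""
    chain = [start]
    while chain[-1] in redirects and len(chain) <= max_hops:
        target = redirects[chain[-1]]
        if target in chain:  # redirect loop: stop instead of spinning forever
            break
        chain.append(target)
    return chain

# Hypothetical redirect map: /a -> /b -> /c -> /final
redirects = {"/a": "/b", "/b": "/c", "/c": "/final"}

chain = redirect_chain("/a", redirects)
hops = len(chain) - 1  # number of extra requests before reaching the content
print(chain, "->", hops, "hops")

# Flattened version: each source redirects directly to the final URL (one hop).
flattened = {source: redirect_chain(source, redirects)[-1] for source in redirects}
print(flattened)
```

The flattened map is what you would then configure on the server, so that a crawler never needs more than one hop.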

3.9. The cache buster

Sometimes a file such as a CSS file is referenced with a query string, like this: style.css?8577484. The catch with this naming scheme is that the number after the “?” sign is regenerated automatically, in some setups every time the file is requested.

The idea is to prevent users’ browsers from serving an outdated cached copy of the file. But this technique also generates a massive number of URLs that Google will process individually, which affects the crawl budget.

3.10. Missing redirects

In order not to lose a part of its audience, a site can decide to redirect its non-www version to a www version, exactly like twaino.com to www.twaino.com, or vice versa

It may also want to migrate from the HTTP protocol to HTTPS. However, if these two site-wide redirects are not properly configured, Google might crawl 2 or even 4 URLs for the same site:

http://www.monsite.com

https://www.monsite.com

http://monsite.com

https://monsite.com
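The logic of a correct setup can be sketched in a few lines of Python. This is only an illustration: it assumes the HTTPS www version is the one you want to keep (the choice is yours), and the function name canonical_url is made up for the example. On a real site, the same rule is applied by the web server as a 301 redirect.

```python
from urllib.parse import urlsplit, urlunsplit

# Assumption for this example: the HTTPS + www variant is the canonical one.
CANONICAL_SCHEME = "https"
CANONICAL_HOST = "www.monsite.com"

def canonical_url(url: str) -> str:
    """Map the www/non-www and HTTP/HTTPS variants of the site to one URL."""
    parts = urlsplit(url)
    bare_host = CANONICAL_HOST.removeprefix("www.")
    if parts.netloc.lower() in (bare_host, "www." + bare_host):
        return urlunsplit(
            (CANONICAL_SCHEME, CANONICAL_HOST, parts.path, parts.query, parts.fragment)
        )
    return url  # a different site: leave it untouched

variants = [
    "http://www.monsite.com",
    "https://www.monsite.com",
    "http://monsite.com",
    "https://monsite.com",
]

# All four variants collapse into a single address.
canonical = {canonical_url(u) for u in variants}
print(canonical)
```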

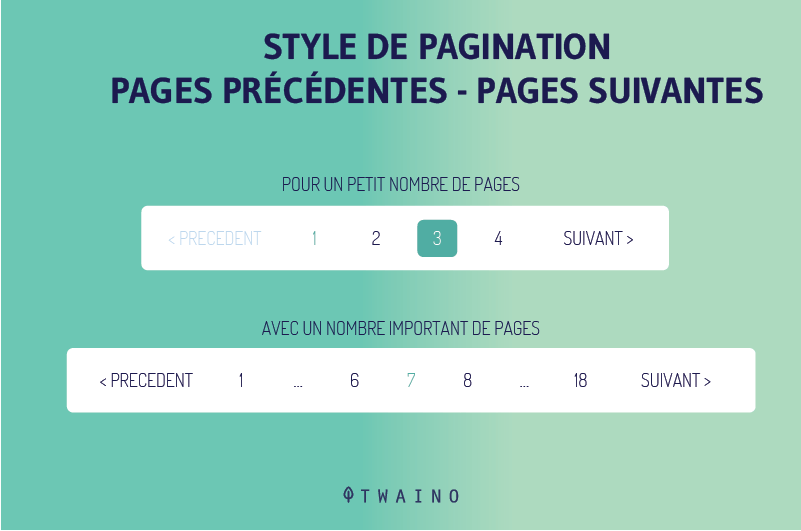

3.11. Poor pagination

When the pagination consists only of “previous” and “next” buttons, without any page numbers, Google has to crawl several pages in sequence before understanding that it is a pagination

The pagination style that is recommended and does not waste the crawl budget is the one that explicitly mentions the number of each page.

With this pagination style, Googlebot can easily navigate from the first page to the last page to get an idea of which pages are important to index.

If you detect one or more of the factors mentioned so far, it is important to optimize your crawl budget to help Googlebot index the most important pages for you.

But how to optimize it?

Chapter 4: How to optimize the crawl budget?

Now that the basics have been established, we can start optimizing the crawl budget itself

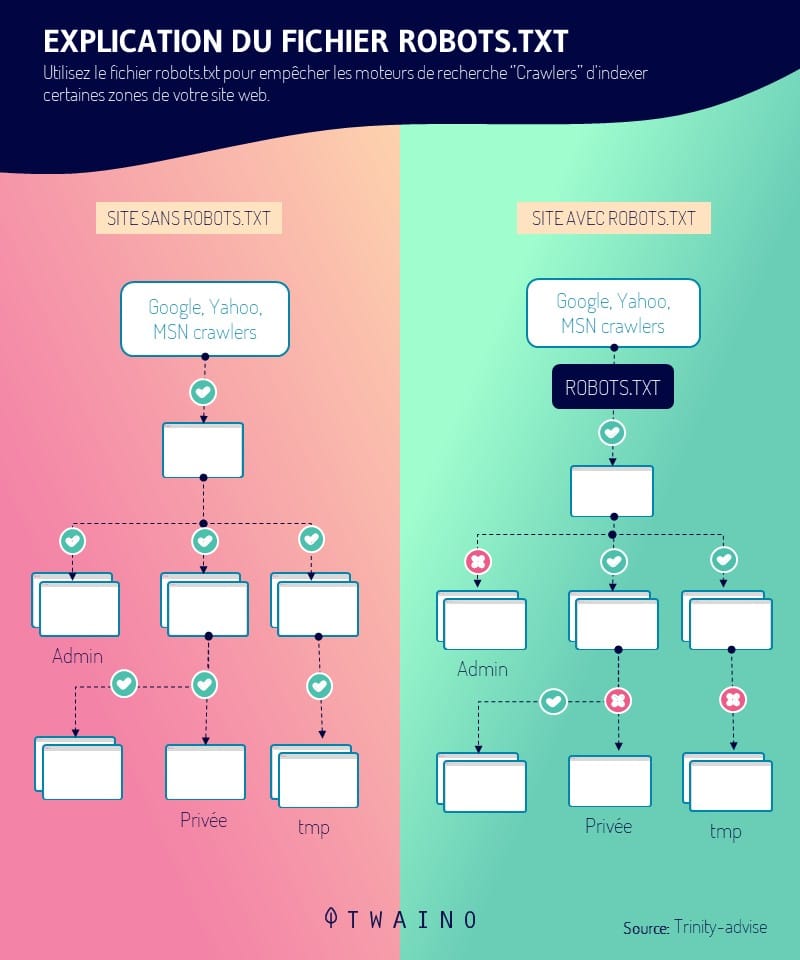

4.1. Allow crawling of your important pages in the robots.txt file

This first step of crawl budget optimization is probably the most important of all

You have two ways to manage your robots.txt file: directly by hand or with the help of an SEO audit tool

If you’re not a programming buff, the tool option is probably the easiest and most effective

Take a tool of your choice, then load your robots.txt file into it. In just a few seconds, you can decide to allow or disallow crawling of any page on your site

Find out how to do it in this guide entirely devoted to robots.txt
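If you prefer to check your rules by hand, Python’s standard library ships a robots.txt parser. The sketch below is only an example: the disallowed paths (/cart/ and /search) and the example.com URLs are assumptions chosen for illustration, not a recommendation for your site. Note also that this parser applies the first matching rule, which can differ from Google’s own matching on more complex files.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content: block low-value sections, allow the rest.
rules = """\
User-agent: *
Disallow: /cart/
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())  # parse the rules without fetching anything

important_page = "https://example.com/blog/crawl-budget"
low_value_page = "https://example.com/cart/checkout"

# can_fetch() tells whether the given user agent may crawl the URL.
print(parser.can_fetch("Googlebot", important_page))
print(parser.can_fetch("Googlebot", low_value_page))
```

Testing a handful of important URLs this way before deploying the file helps avoid blocking a page you want crawled.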

4.2. Avoid redirect chains as much as possible

You cannot avoid redirects entirely, because in some circumstances they are useful. Ideally, however, you should keep the number of redirects on a website to a strict minimum

But, admittedly, this is almost impossible for large sites. At some point, they are forced to use 301 and 302 redirects

However, piling up redirects across the site will severely damage the crawl budget and can even prevent Googlebot from reaching the pages you want to rank for.

Limit yourself to a few redirects, and keep any chain short: one or two hops at most

4.3. Use HTML code as much as possible

Google’s crawler has gotten much better at understanding content in JavaScript, as well as Flash and XML

But other search engines can’t say the same. So to allow all search engines to fully explore your pages, it would be wiser to use HTML which is a basic language, accessible to all search engines

Thus, you increase the chances of your pages being crawled by all search engines

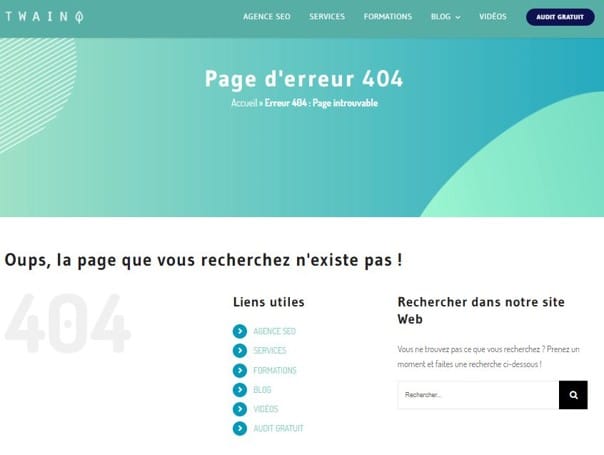

4.4. Correct HTTP errors so that they don’t waste your crawl budget

If you didn’t know it, 404 and 410 errors literally eat away at your crawl budget. Even worse, they degrade your user experience

Imagine the disappointment of a user who rushes to check out a product you sell or content you’ve written and ends up on a blank page, with no other alternative

This is why it is important to correct HTTP errors for the health of your site, but also for the satisfaction of your visitors

As for redirection errors, it would be more practical to use a dedicated tool to spot these errors and fix them if you are not very fond of programming

You can use tools like SE Ranking, Ahrefs, or Screaming Frog to perform an SEO audit of your site

4.5. Set up your URLs properly

If several different URLs lead to the same page, Google will not see it that way. For the search engine, each page is identified by one and only one URL

So Googlebot will crawl each URL separately, even if several of them lead to the same content. This is a waste of the crawl budget

If you have such a situation on your site, you should let Google know which URL is the main one

This has a double advantage: on the one hand your crawl budget is saved, and on the other you no longer have to worry about your duplicate content counting against you

Find out how to manage your duplicate content by consulting this article which shows in detail the procedure to follow

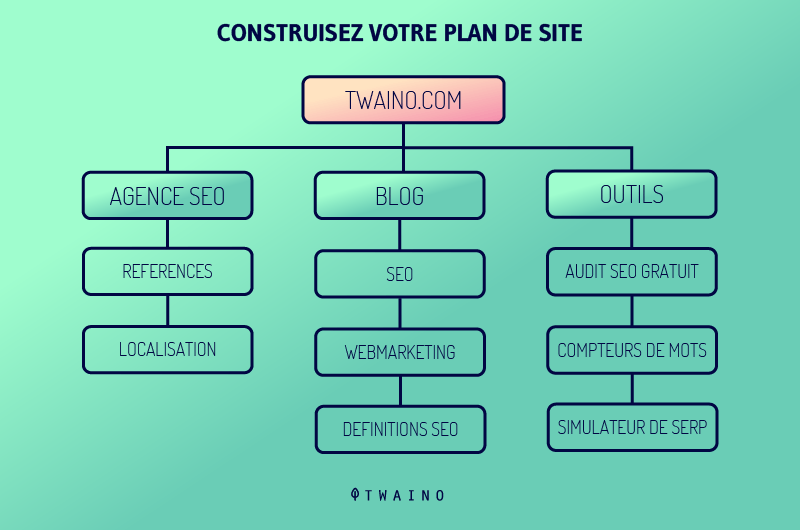

4.6. Keep your sitemap up to date

It is important to keep your XML sitemap up to date. This allows crawlers to understand the structure of your site, making it easier to crawl

It is recommended to include only canonical URLs in your sitemap
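For a small site without a plugin that does this automatically, a sitemap can be generated with a short script. The sketch below uses Python’s standard XML library and follows the sitemaps.org format; the URLs and dates are invented for the example, and the function name build_sitemap is hypothetical. It assumes you already have a list of your canonical URLs with their last modification dates.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Build a sitemap XML string from (canonical URL, last-modified date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = lastmod  # format YYYY-MM-DD
    return ET.tostring(urlset, encoding="unicode")

# Illustrative data: only canonical URLs, no redirected or duplicate variants.
pages = [
    ("https://example.com/", "2020-03-11"),
    ("https://example.com/blog/crawl-budget", "2020-03-10"),
]

sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

Regenerating the file whenever pages are added or removed is what keeps the sitemap up to date.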

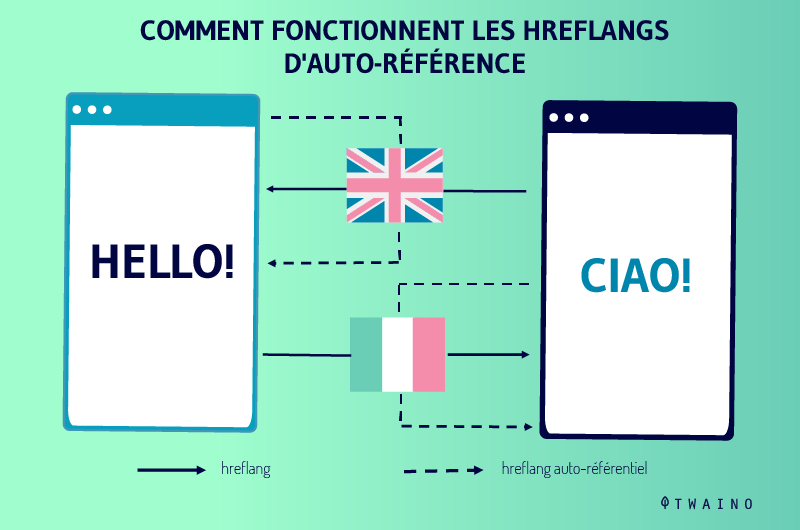

4.7. Hreflang tags are essential

When it comes to crawling localized pages, crawlers rely on hreflang tags. This is why it is important to inform Google of the different language or regional versions you have

Find all the information about the Hreflang tag in this mini-guide.

Those were 7 tips to optimize your site’s crawl budget, but it doesn’t end there. It is also useful to monitor how Googlebot crawls your site and accesses your content

Chapter 5: How to monitor your crawl budget?

To monitor the crawl budget of your site, you have two tools: Google Search Console and the server log file

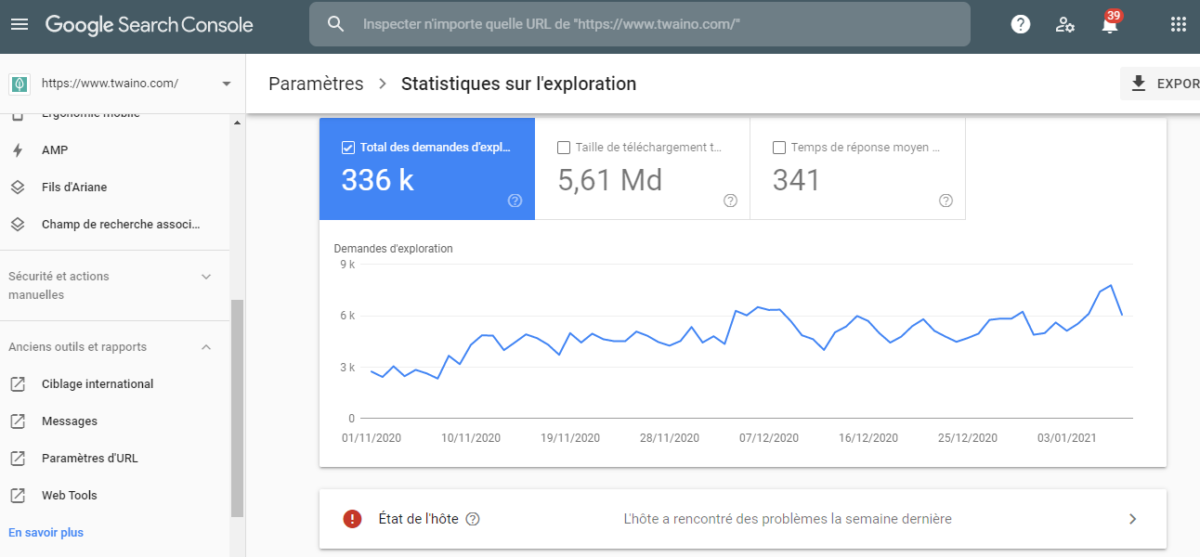

5.1. Google Search Console

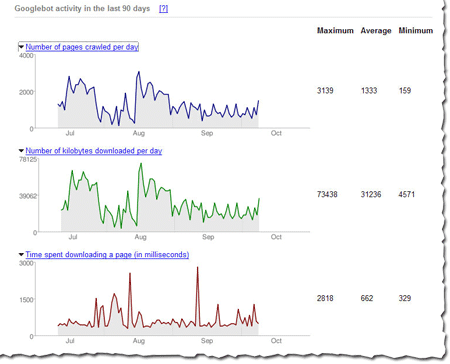

GSC is a tool proposed by Google that offers several features that allow you to analyze your position in the index and SERPs of the search engine. The tool also offers an overview of your crawl budget

To start the audit, you can access the “Crawl Stats” report. This report traces the activity of Googlebot on your site over the last 30 days

On this report, we can see that Googlebot crawls an average of 48 pages per day on the site. The formula to calculate the average crawl budget for this site would be

BC = Average number of pages crawled per day x 30 days

BC = 48 x 30

BC = 1440

So for this site, the average crawl budget is 1440 pages per month

However, it’s important to note that these Google Search Console numbers are approximate

In the end, this is an estimate and not an absolute value of the crawl budget. These numbers just give you an idea of the crawl rate the site is receiving

As a little tip, you can write down this estimate before you start optimizing your crawl budget. Once the process is complete, you can look at the value of this crawl budget again

By comparing the two values, you will better evaluate the success of your optimization strategies
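The estimate and the before/after comparison fit in a few lines of Python. The first figure (48 pages per day) comes from the example above; the "after" figure of 60 pages per day is purely hypothetical, just to show the comparison. The function names are made up for this sketch.

```python
def estimate_monthly_crawl_budget(avg_pages_per_day: float, days: int = 30) -> float:
    """BC = average number of pages crawled per day x number of days."""
    return avg_pages_per_day * days

def relative_change(before: float, after: float) -> float:
    """Relative evolution between two estimates (0.25 means +25%)."""
    return (after - before) / before

# Estimate noted before optimization (figure from the report above).
budget_before = estimate_monthly_crawl_budget(48)

# Hypothetical figure read from the same report after optimization.
budget_after = estimate_monthly_crawl_budget(60)

print(budget_before, budget_after, relative_change(budget_before, budget_after))
```

Keep in mind that both values remain estimates, so a small difference between them does not prove much.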

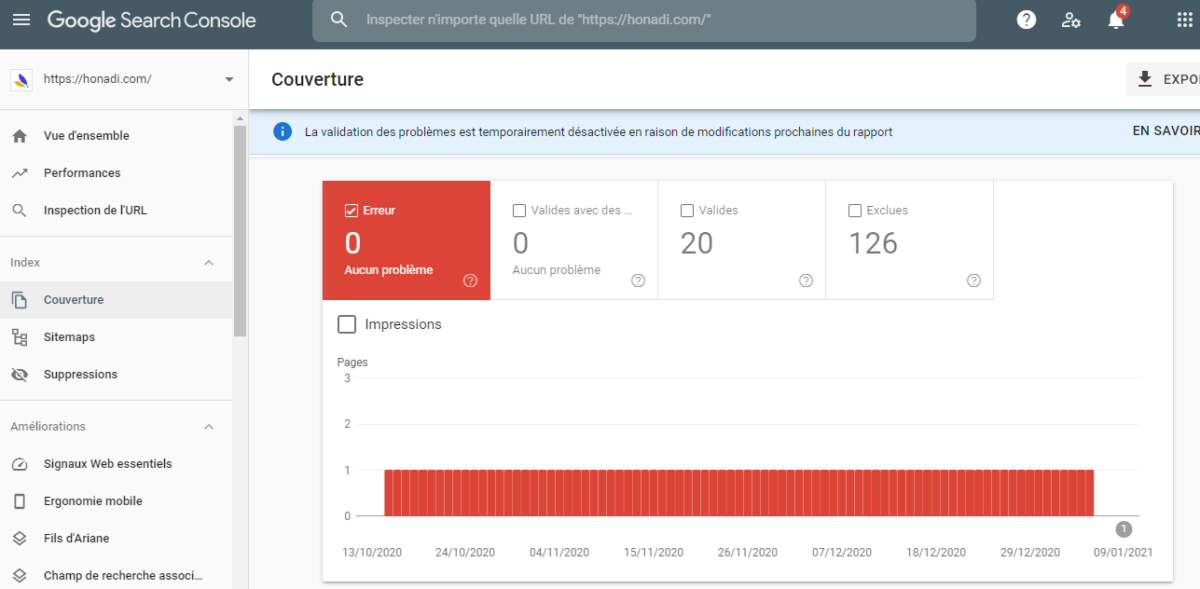

Google Search Console also offers another report, the “coverage report”, which shows how many pages have been indexed on the site, as well as those that have been de-indexed.

You can compare the number of indexed pages to the total number of pages on your site to know the real number of pages ignored by Googlebot.

5.2. The server log file

The server log file is undoubtedly one of the most reliable sources to check the crawl budget of a website

Simply because this file faithfully traces the history of the activities of the indexing robots that crawl a site. In this log file, you also have access to the pages that are regularly crawled and their exact size

Here is an overview of the contents of a log file

11.222.333.44 - - [11/Mar/2020:11:01:28 -0600] "GET https://www.seoclarity.net/blog/keyword-research HTTP/1.1" 200 182 "-" "Mozilla/5.0 Chrome/60.0.3112.113"

To interpret this log entry, we’ll say that on March 11, 2020, a client requested the page https://www.seoclarity.net/blog/keyword-research from the Google Chrome browser

The code “200” means that the server successfully returned the page, and “182” is the size of the response in bytes
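Reading thousands of such lines by eye is not realistic, so here is a minimal Python sketch that parses them. It assumes the common "combined" log format shown above; your server may be configured differently. The second sample line, with a Googlebot user agent, is invented for the illustration. Also note that a user agent can be faked, so this simple text check is only a first filter.

```python
import re
from collections import Counter

# Pattern for the "combined" access log format (an assumption about your server).
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<size>\d+|-) '
    r'"(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)

def parse_line(line: str):
    """Return the fields of one log line as a dict, or None if it does not match."""
    match = LOG_PATTERN.match(line)
    return match.groupdict() if match else None

sample = [
    '11.222.333.44 - - [11/Mar/2020:11:01:28 -0600] "GET https://www.seoclarity.net/blog/keyword-research HTTP/1.1" 200 182 "-" "Mozilla/5.0 Chrome/60.0.3112.113"',
    # Hypothetical Googlebot visit, added for the example.
    '66.249.66.1 - - [11/Mar/2020:11:02:10 -0600] "GET /blog/keyword-research HTTP/1.1" 200 182 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
]

entries = [entry for entry in map(parse_line, sample) if entry]

# Count how many times a Googlebot user agent requested each URL.
googlebot_hits = Counter(
    entry["url"] for entry in entries if "Googlebot" in entry["user_agent"]
)
print(googlebot_hits)
```

Applied to a full log file, the counter shows which pages Googlebot visits most often and which ones it never reaches.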

Chapter 6: Other questions asked about the crawl budget

6.1. What is the crawl budget?

The crawl budget is the number of URLs on a website that a search engine can crawl in a given period of time based on the crawl rate and the crawl demand.

6.2. What are crawl rate and crawl rate limit?

The crawl rate is defined as the number of URLs per second that a search engine can crawl on a site. The crawl rate limit can be defined as the maximum rate at which URLs can be crawled without disrupting the experience of a site’s visitors.

6.3. What is crawl demand?

In addition to the crawl rate and the crawl rate limit, how often a URL is crawled varies from page to page depending on the demand for that specific page.

The most popular pages are likely to be crawled more often than infrequently visited pages, pages that are not updated, or pages that have little value

6.4. Why is the crawl budget limited?

The crawl budget is limited to ensure that a website’s server is not overloaded with too many simultaneous connections or a high demand on server resources, which could negatively impact the experience of website visitors.

6.5. How often does Google crawl a site?

The popularity, crawlability, and structure of a website are all factors that determine how long it will take Google to crawl a site. In general, Googlebot can take more than two weeks to crawl a site. However, this is a projection and some users have claimed to be indexed in less than a day.

6.6. How to optimize a crawl budget?

- Allow crawling of your important pages for robots;

- Beware of redirect chains;

- Use HTML whenever possible;

- Don’t let HTTP errors consume your crawl budget;

- Take care of your URL parameters;

- Update your sitemap;

- Hreflang tags are vital;

- Etc.

6.7. What is the difference between crawling and indexing?

Crawling and indexing are two different things and this is often misunderstood in the SEO industry. Crawling means that Googlebot examines all the content or code on the page and analyzes it

Indexing on the other hand means that Google has made a copy of the content in its index and the page can appear in Google SERPs.

6.8. Does noindex save crawl budget?

The noindex meta tag does not save your Google crawl budget. This may be an obvious point for most of you, but Google’s John Mueller confirmed that using the noindex meta tag on your site will not help you save your crawl budget. Googlebot has to crawl a page to see the tag, so it only prevents the page from being indexed, not crawled.

Conclusion

In short, the crawl budget is the set of resources that Google reserves for each site for crawling and indexing its pages

Even if the crawl budget is not in itself a ranking factor, optimizing it can help the pages of the site, especially the most important ones, get indexed and ranked

For this, we have presented throughout this guide a number of practices to take into account

I hope this article has helped you to better understand the concept of crawl budget and especially how to optimize it

If you have any other questions, please let me know in the comments.

Thanks and see you soon!