Cloaking is a Black Hat optimization technique that consists of preparing different content for the same request. When a page is requested, a first, well-optimized version is served to search engines, while another, often spammy, is displayed to Internet users.

Have you ever run a query on Google, clicked on one of the first results, and noticed that the page had nothing to do with your search?

If so, you probably thought it was a mistake on Google’s part. But maybe not! You may have been cloaked.

So

- What is cloaking?

- How does it work?

- How can it be used?

- Is it a practice recommended by Google?

Discover a complete definition of the term “cloaking”, with a clear and precise answer to each question.

Chapter 1: Cloaking – What is it exactly?

In this first chapter, we are going to break down the word “cloaking” to help you understand it

To do this, we will discuss

- Its general principle

- And its objectives.

1.1. The general principle of cloaking

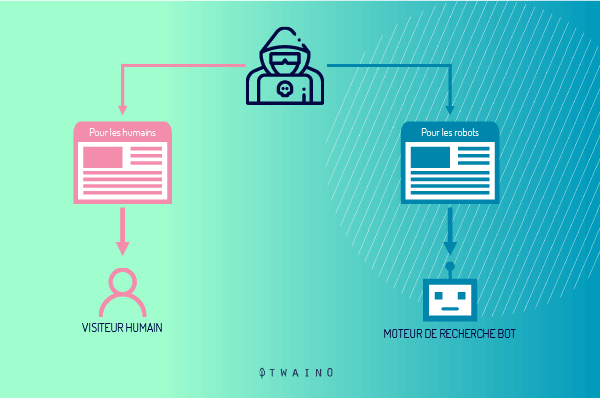

The principle of cloaking is simply to serve two distinct visitors, the Internet user and the search engine crawler, partially or totally different pages from the same address.

This term can also be used when a server is configured to display different contents for the same web page.

1.1.1. What does it mean in concrete terms?

Translated from English, the term “cloaking” means “concealment”, “camouflage” or “hiding”

In other words, it is a set of maneuvers that hides from the indexing robots the real web page that the user will see on screen, by serving them in parallel a different page expressly optimized for robots.

It is a way to arrange the rendering of a web page according to the type of Internet user who comes to consult the information.

As a result, the content of the URL proposed at the request of the Internet user will not be the same as the one presented to the indexing robots, in particular to Googlebot and Bingbot from Microsoft.

This content will then be available in two versions

- A well optimized version reserved for search engines

- Another one with visuals and reserved for cybernauts.

1.1.2. How was cloaking born?

It is well known that inserting multimedia elements such as images or videos reinforces the overall quality of an article and helps optimize it for SEO.

In addition to their importance in organic SEO, multimedia content enhances the user experience. Most Internet users easily understand visuals and are more inclined to consult them rather than read text blocks

On the other hand, a less obvious point, which you may not know, is that this multimedia content (graphics, videos or Flash animations) makes the web pages that contain it somewhat difficult for robots to understand.

Indeed, although search engines are quite capable, they cannot yet fully interpret an image or a video.

Therefore, they rely on the content of specific tags (alt or title) to understand the theme of these visuals

For many years now, these tags, which are specific to multimedia content and which Internet users do not see, have been taken into account by search engines during indexing.

This is where cloaking comes into play!

The description of the multimedia content is then presented in the form of textual content to the indexing robots of the search engines, which can read it in its entirety without any difficulty.

This was the little story about cloaking to understand its origin.

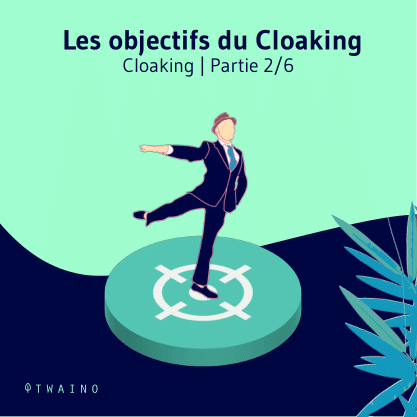

1.2. The objectives of cloaking

With cloaking, it is possible to improve your ranking in the SERPs, but dishonestly.

And that is the problem: it lures the search engines in order to obtain a better place in the SERPs by underhanded means.

Generally used for SEO purposes, cloaking is a technique that serves Black Hat particularly well, where it is used to dishonestly improve the position of websites in the SERPs.

But when you think about it, the purpose of cloaking is not only limited to deception!

There are also other reasons, ethical this time, to use cloaking

In this series of good intentions, we can mention

- The desire to present users with an attractive and well-illustrated web page with videos developed in their native language if possible

- Protecting a web page from email-harvesting robots;

- The presentation of targeted advertisements

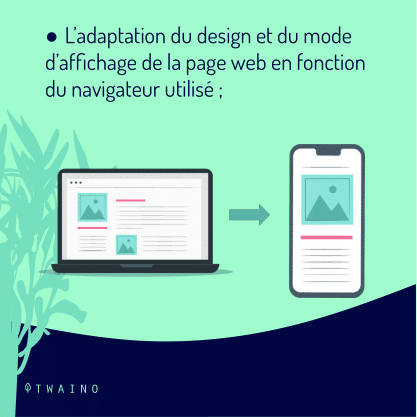

- The adaptation of the design and display mode of the web page according to the browser used (Mozilla Firefox, Chrome or Explorer, for example);

- And many others.

In summary, cloaking is one of those multi-faceted practices, whose objectives vary depending on your intentions and the uses you make of it

With that in mind, how does it technically work?

Chapter 2: How does cloaking technically work?

In this second chapter, I will first cover the basics of how cloaking works and then talk about its many variations

2.1. The basics of how the cloaking technique works

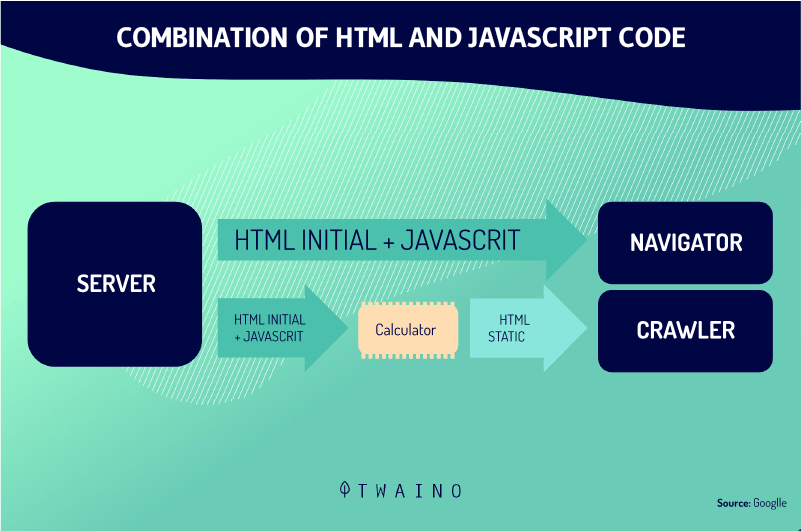

As a reminder, cloaking makes it possible to present one page to users and another, optimized to please Google’s robots.

But how can this little sleight of hand be explained?

Well, it all comes down to the identification of a certain number of parameters such as

- The user agent

- The host

- The IP address

- And many others

Depending on the parameter that serves as a support, the type of cloaking can be different

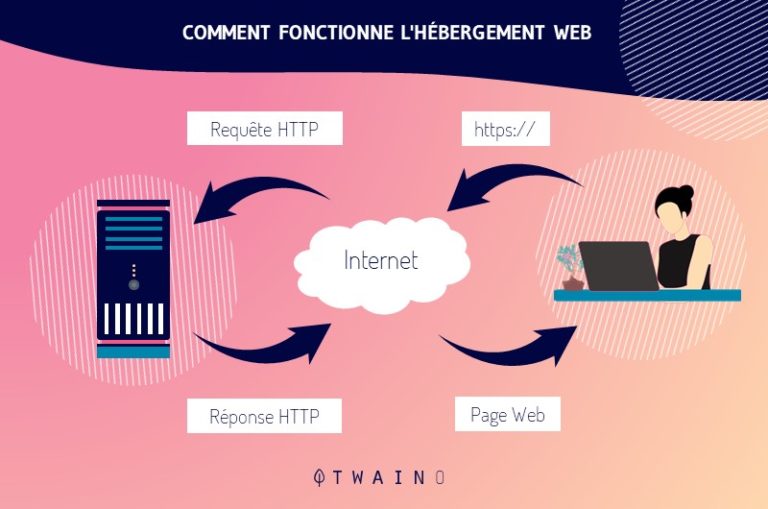

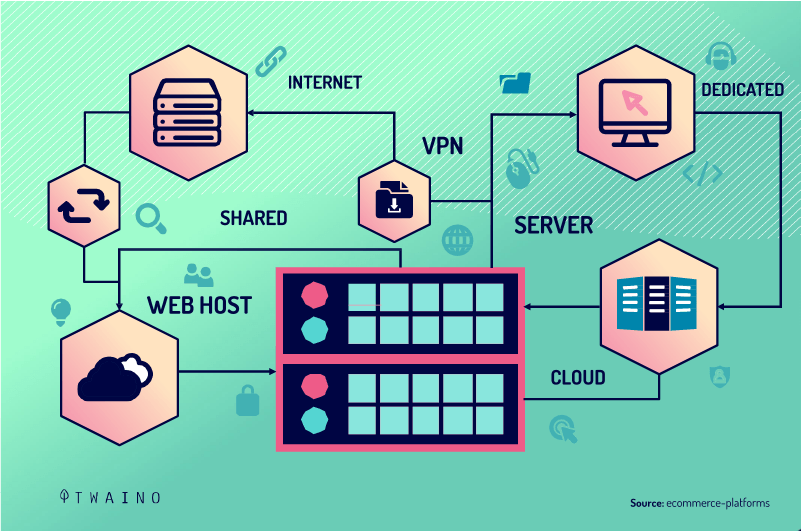

When a user visits a website, his browser systematically sends a request to the server hosting the site

This request is made possible thanks to the HTTP (Hypertext Transfer Protocol) which provides many elements about the user, including the identification parameters mentioned above.

These parameters allow the server hosting the website to identify and distinguish the various types of visitors to the website. Once the server recognizes the presence of a visitor, it automatically adapts the content of the site for that type of visitor

The server is programmed so that when it detects that the visitor is not a human Internet user but an indexing robot, the script (in a server-side language such as PHP or ASP) generates a page different from the official one reserved for human visitors.

It is easy to understand how the administrators of a site intentionally manage to send you the page of their choice thanks to your identification parameters transmitted during your request

This is the same scenario that is also used for search engines.

A general practice in principle, but which is unique in its forms.

The actual functioning of cloaking will be detailed in a specific way in each of its many variations in the following section.

2.2. The multiple variants of the cloaking technique

What are the different types of cloaking?

This is what we will discuss in this section. Here I will discuss each of the most frequently encountered variants, in their form, their particularities and their mechanism.

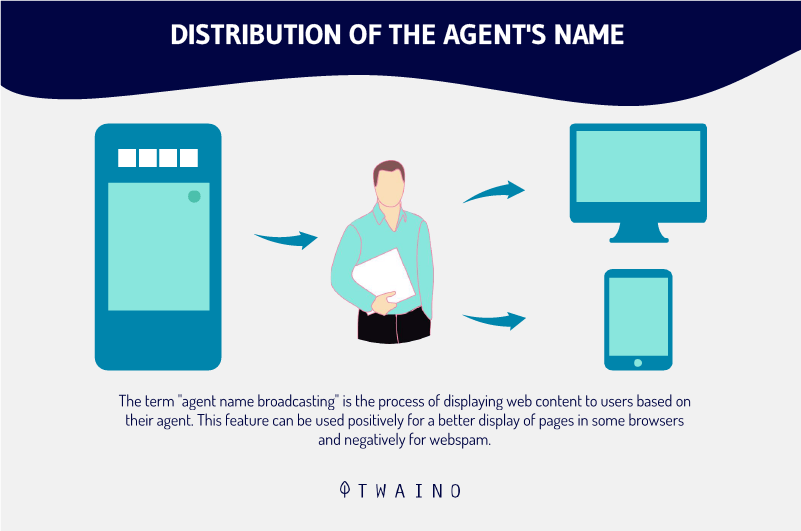

2.2.1. “Agent Name Delivery”: Cloaking based on the user agent

User agent-based cloaking is by far the most widespread form of the technique. And for good reason, access to the web is usually done via a browser, and a browser means a user agent.

The user agent has therefore become the preferred identification parameter for web site administrators.

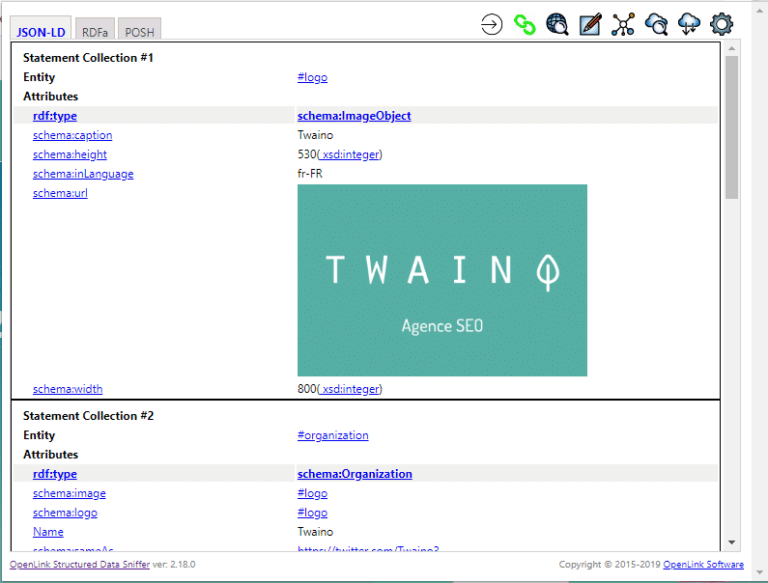

When a human visitor or a robot connects to a website, the user agent is an integral part of the HTTP header (the set of meta-information and real data exchanged via an HTTP request) that is sent to the website server.

Also called the agent name, the user agent is an identifier, a signature sent by each visitor of a website.

Thus, whether it is an Internet user who accesses the website from a browser (Safari, Opera, Chrome, Explorer…) or an automated program (spiders, bots, crawlers and any other search engine robot), each has its own specific agent name.

As explained above, each time you visit a website, your browser sends an HTTP request to the web server. In this request, the user agent appears as follows:

USER-AGENT=Mozilla/5.0 (compatible; MSIE 10.0; Windows NT 10.0; WOW64; Trident/6.0)

In our example, the browser you connected with is Internet Explorer 10, running on Windows 10, with version 6.0 of its rendering engine (Trident).
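To make the reading of this string more concrete, here is a minimal Python sketch that pulls the three tokens discussed above out of the example. The function name `parse_legacy_ie_user_agent` is my own, and the patterns only cover this old Internet Explorer format; real user agent strings vary a lot, so treat it as an illustration rather than a general parser.

```python
import re

def parse_legacy_ie_user_agent(user_agent: str) -> dict:
    """Extract the browser, OS and engine versions from an old IE-style string."""
    patterns = {
        "msie": r"MSIE (\d+(?:\.\d+)?)",              # browser version
        "windows_nt": r"Windows NT (\d+(?:\.\d+)?)",  # operating system version
        "trident": r"Trident/(\d+(?:\.\d+)?)",        # rendering engine version
    }
    result = {}
    for name, pattern in patterns.items():
        match = re.search(pattern, user_agent)
        result[name] = match.group(1) if match else None
    return result

example = "Mozilla/5.0 (compatible; MSIE 10.0; Windows NT 10.0; WOW64; Trident/6.0)"
print(parse_legacy_ie_user_agent(example))
# {'msie': '10.0', 'windows_nt': '10.0', 'trident': '6.0'}
```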

This aside, the identification of the agent name also allows the server to “cloak” (modulate, hide or adapt) the contents of the web page in question.

The server can also refine the ergonomics of the web page thanks to optimized style sheets (CSS).

Agent name delivery is therefore the basis of cloaking. It is the starting point for all the modifications that lead to different content being displayed to each party, the surfer and the robot.

As soon as the browser’s request is received, the site’s server will load (for HTML pages) or generate (for PHP pages) the web page that you have requested in its “humanized” form (texts and images, videos…).

On the other hand, upon receiving a request from a robot, the site’s server will display the web page in its ultra optimized form and filled with textual content for the robots.

Cloaking based on the user agent is a classic. What I didn’t tell you, however, is that this type of cloaking is definitely one of the riskier forms of the technique.

Being easily detectable by search engines, it tends to be replaced by other types.
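The mechanism described in this section boils down to a string test on the User-Agent header. The sketch below is only an illustration: the token list and the names `looks_like_crawler` and `visitor_type` are assumptions of mine, not taken from any real server.

```python
# Illustrative tokens only; real crawlers announce themselves in many other ways.
CRAWLER_TOKENS = ("googlebot", "bingbot")

def looks_like_crawler(user_agent: str) -> bool:
    """Return True when the User-Agent header contains a known crawler token."""
    lowered = user_agent.lower()
    return any(token in lowered for token in CRAWLER_TOKENS)

def visitor_type(user_agent: str) -> str:
    """Label the visitor the way a user-agent-based setup would."""
    return "robot" if looks_like_crawler(user_agent) else "human"

print(visitor_type("Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"))
print(visitor_type("Mozilla/5.0 (compatible; MSIE 10.0; Windows NT 10.0; WOW64; Trident/6.0)"))
```

Since any client can send any User-Agent value, the same page can simply be requested twice with two different values and the results compared, which helps explain why this form is so easy to detect.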

2.2.2. “IP Delivery”: Cloaking based on the IP address

Cloaking based on the IP (Internet Protocol) address is a form of cloaking quite similar to the previous one

Here again, it is a matter of classifying site visitors into humans and non-humans (indexing robots) in order to offer them distinct and adapted content.

In contrast to the previous form, this type of cloaking is often used with good intentions

Indeed, this practice is often used in the context of geotargeting (geolocation of the Internet user), where it allows the website server to adapt the language of the web pages and/or the advertisements to the visitors of the website according to their region.

Far from being limited to these peaceful uses, IP address-based cloaking is also used in abusive SEO maneuvers, in particular when website administrators serve content strictly dedicated to the IP addresses known to belong to web crawlers.

The IP address is actually a sequence of numbers (192.153.205.26 for example) available in the logs. The latter are files from an Internet server that contain information such as the date and time of connection, but also the IP address of visitors

This sequence of numbers, which is unique to each device connected to the Internet, provides information on the geographical location and Internet service of users.

It is important to note that web servers are perfectly capable of segmenting their visitors (robots and humans) simply from their IP address.

Cloaking based on IP addresses consists of tracking down an IP address and, depending on the human nature of its owner, modifying the content of the web page accordingly.

However, this variant of the technique has a serious shortcoming. It would only work if the search engine robots used the same IP address for the same site

Fortunately or unfortunately, the vast majority of search engines opt for changing IP addresses

A simple way to avoid being the object of manipulation.
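To picture how a server can segment visitors from their IP address alone, here is a small sketch using Python's standard `ipaddress` module. The ranges are hypothetical: I took them from the address blocks reserved for documentation, not from any real search engine, and the function name is mine.

```python
import ipaddress

# Hypothetical "crawler" ranges, borrowed from the blocks reserved for documentation.
HYPOTHETICAL_CRAWLER_NETWORKS = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
]

def ip_in_crawler_ranges(ip: str) -> bool:
    """Return True when the address falls inside one of the listed ranges."""
    address = ipaddress.ip_address(ip)
    return any(address in network for network in HYPOTHETICAL_CRAWLER_NETWORKS)

print(ip_in_crawler_ranges("192.0.2.15"))      # True
print(ip_in_crawler_ranges("192.153.205.26"))  # False
```

The weakness mentioned above is visible here: the list is static, so a crawler that connects from an address outside the list is treated like any human visitor.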

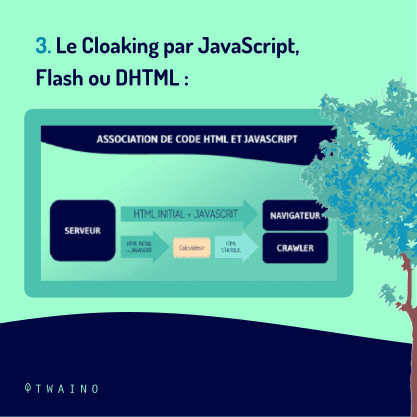

2.2.3. Cloaking with JavaScript, Flash or DHTML

First, let’s note:

- JavaScript (abbreviated to JS) is a lightweight scripting language created to make web pages dynamic and interactive.

- DHTML, or Dynamic HTML, is the set of programming techniques used to make an HTML web page capable of transforming itself when viewed in the web browser.

- Flash, or Adobe Flash, formerly known as Macromedia Flash, is a software program for manipulating vector graphics, raster images and ActionScript scripts to create multimedia content for the Web.

It is also worth remembering that, just like the multimedia content used as an example in the previous chapter, search engines also seem to have difficulty identifying and understanding JavaScript, Flash or DHTML code.

This is one of the most difficult limitations for search engines, because it serves as a gateway and indirectly allows cloaking in this form

The cloaker (person practicing cloaking) just has to create a web page full of keywords, containing a redirection written in one of these languages (JS, Flash, DHTML) to another, “proper” page.

The user’s browser follows the redirection and lands on the destination page, while the robots, which do not execute the redirection, index the keyword-stuffed page and display it in their SERP.

It can be said that this variant of the technique is quite simple to establish. Moreover, it is more difficult for search engines to spot since it involves the use of codes that are quite difficult for them to interpret.
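From the point of view of someone auditing a page, the redirection described here leaves a trace in the source code. The sketch below looks for a few common JavaScript redirect patterns inside `<script>` blocks. The pattern list and the name `has_js_redirect` are my own assumptions; obfuscated code would easily escape such a simple check.

```python
import re

# A few common ways to redirect in JavaScript; far from exhaustive.
REDIRECT_PATTERN = re.compile(
    r"(?:window\.|document\.)?location(?:\.href)?\s*=\s*['\"]"
    r"|location\.(?:replace|assign)\s*\(",
    re.IGNORECASE,
)

def has_js_redirect(html: str) -> bool:
    """Return True when an inline script contains a recognizable redirect."""
    scripts = re.findall(
        r"<script\b[^>]*>(.*?)</script>", html, re.IGNORECASE | re.DOTALL
    )
    return any(REDIRECT_PATTERN.search(script) for script in scripts)

page = '<html><script>window.location.href = "https://example.com/other";</script></html>'
print(has_js_redirect(page))  # True
```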

This particular form of the technique is among the most used by cloakers. Although easy to implement, cloaking via JS, Flash or DHTML remains cheating, pure and simple.

Its objective is clear, and it could not be otherwise: to deceive search engines and users about the real nature of the web page.

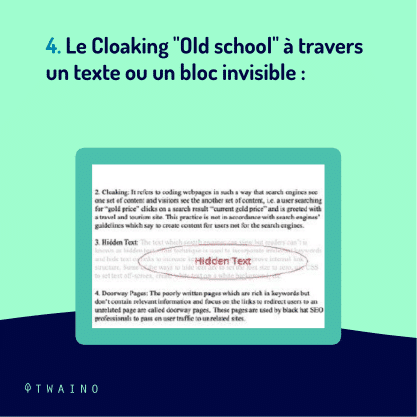

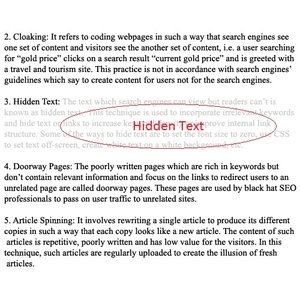

2.2.4. The “Old school” Cloaking through a text or an invisible block

Yes, we go back to the basics. Cloaking through invisible text or block is a bit like an old school practice.

It is indeed the oldest form of cloaking, the original one, and also the most deceptive and the most risky. Now easily spotted (and penalized), it doesn’t even seem to fool users, let alone search engine robots.

Concretely, this variant of the technique simply consists in putting here and there on the web page an invisible content (a white text on a white background, or a black text on a black background or a frame with microscopic dimensions) for the Internet users.


This content, although invisible to the user, will still be considered and valued by some search engines

Thus the invisible text will be a raw listing of keywords or any other element capable of improving the relevance of the web page and therefore its position in the results of search engines.

In addition, it is possible to add the invisible block on a block perfectly visible to the user, with the help of CSS (Cascading Style Sheets)

In the end, a part of the web page (optimized for robots) is hidden from the user.

Well, that was 10 or 15 years ago. Today, this variant of the practice is obsolete and clearly proscribed

The vast majority of search engines no longer play the hide-and-seek game. Even the CSS is accessible to search engine bots, which soon find out about the ruse.

As if that wasn’t enough, the smartest users are also able to uncover the hidden content by simply selecting the suspicious areas of the page with the mouse.

A simple and ingenious technique that has become obsolete and vulnerable.
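To show why this ruse is so easy to uncover, here is a short sketch that flags elements whose inline style makes their text invisible. It relies only on Python's standard `html.parser`. The rules (same text and background color, `display: none`, `visibility: hidden`, zero font size) and all the names are my own simplifications; a real audit would also have to read external style sheets.

```python
from html.parser import HTMLParser

def parse_inline_style(style: str) -> dict:
    """Turn 'color:#fff; display:none' into {'color': '#fff', 'display': 'none'}."""
    declarations = {}
    for part in style.split(";"):
        if ":" in part:
            prop, value = part.split(":", 1)
            declarations[prop.strip().lower()] = value.strip().lower()
    return declarations

def is_suspicious(style: str) -> bool:
    """Apply a few simple rules that make text invisible to a human reader."""
    css = parse_inline_style(style)
    same_color = "color" in css and css["color"] == css.get("background-color")
    hidden = css.get("display") == "none" or css.get("visibility") == "hidden"
    tiny = css.get("font-size") in {"0", "0px"}
    return same_color or hidden or tiny

class HiddenTextFinder(HTMLParser):
    def __init__(self):
        super().__init__()
        self.flagged = []  # names of the tags whose inline style looks suspicious

    def handle_starttag(self, tag, attrs):
        style = dict(attrs).get("style")
        if style and is_suspicious(style):
            self.flagged.append(tag)

def find_hidden_elements(html: str) -> list:
    finder = HiddenTextFinder()
    finder.feed(html)
    return finder.flagged

sample = '<p style="color:#fff; background-color:#fff">keyword keyword</p><p>Visible text</p>'
print(find_hidden_elements(sample))  # ['p']
```

Keep in mind that `display: none` also has perfectly legitimate uses (menus, tabs), so the result is a list of candidates to review, not a verdict.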

2.2.5. Other forms of cloaking

Apart from the 4 forms of cloaking mentioned above, there are other forms that we will discuss to complete this section

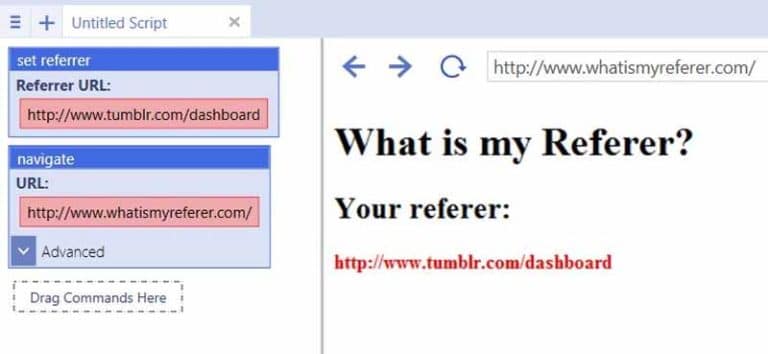

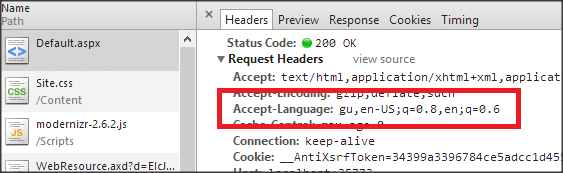

2.2.5.1. Cloaking through HTTP_Referer and HTTP Accept-Language

These two variants are similar in technique. They are based on parameters included in the HTTP header.

The Referer appears as a URL:


It is the complete or partial address of the web page from which the user launched the request to access the current page. Its syntax is:

REFERER=https://www.twaino.com/definition/a/above-the-fold/

In this example, the request was launched from the “Above the fold” definition page of the Twaino.com website.

As for the Accept-Language parameter of the HTTP request, it indicates the various languages that the client is able to understand as well as the preferred local variants of these languages. The content of Accept-Language does not depend on the user’s will.


Cloaking through HTTP_Referer is really about checking the HTTP_Referer header of the visitor and depending on the identity of the requester, proposing a different version of the web page.

It is the same for cloaking via HTTP Accept-Language. After identification of the user’s HTTP Accept-Language header, according to the matches made, a specific form of the web page will be displayed.
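To give an idea of what “identification of the Accept-Language header” looks like in practice, here is a minimal sketch that reads the header and picks a page language. The function names and the fallback language are assumptions of mine, and the parsing is deliberately simplified.

```python
def parse_accept_language(header: str) -> list:
    """Return (language, quality) pairs sorted from most to least preferred."""
    entries = []
    for item in header.split(","):
        item = item.strip()
        if not item:
            continue
        language, _, params = item.partition(";")
        quality = 1.0  # a language without an explicit q value has the highest weight
        params = params.strip()
        if params.startswith("q="):
            try:
                quality = float(params[2:])
            except ValueError:
                quality = 0.0
        entries.append((language.strip(), quality))
    return sorted(entries, key=lambda entry: entry[1], reverse=True)

def pick_language(header: str, available: set, default: str = "en") -> str:
    """Choose the first preferred language for which a page version exists."""
    for language, quality in parse_accept_language(header):
        base = language.split("-")[0].lower()
        if quality > 0 and base in available:
            return base
    return default

header = "fr-CH, fr;q=0.9, en;q=0.8, de;q=0.7, *;q=0.5"
print(parse_accept_language(header))
print(pick_language(header, {"en", "de"}))  # en
```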

2.2.5.2. Host-based cloaking

Host is also a component of the HTTP header. It provides information on the name of the site on which the page consulted by the Internet user is located.

It is presented as follows:

HOST: www.twaino.com

The purpose here is to directly attack the server from which the user connects to the Internet. It is thus possible to directly identify the different servers of the search engines

More effective and more formidable than the form involving the IP address, this form of cloaking is difficult to detect.

Chapter 3: Some use cases of cloaking

That’s it, we’ve seen cloaking in detail in its operating principles. But what about its use? What are the various use cases of this technique?

In this chapter, I describe some known and relatively accepted applications of this practice. Specific use cases showing cloaking as you know it now, in a new light

3.1. Cloaking in crawl budget optimization

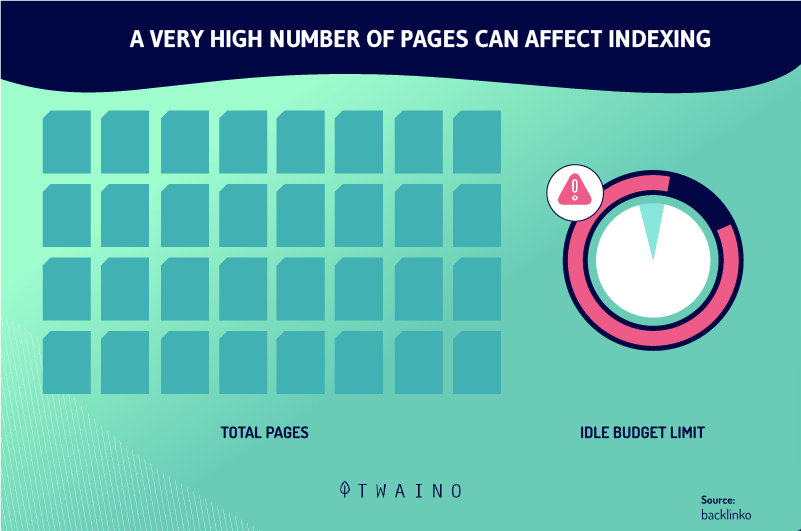

The crawl budget is globally the amount of time that Google allows itself to spend on a website

In practice, it corresponds to the number of pages that the Google bot will explore/crawl on your website according to the time it spends on it

This exploration takes into account a number of criteria

Among others, we can mention

- The speed of the server to respond: The speed of loading the site is also taken into account;

- The depth of the page: That is to say the total number of clicks needed to reach a page of the site from the home page

- Frequency of updates: That is, the quality, uniqueness and relevance of the site’s content;

- And much more.
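The depth criterion in the list above can be computed with a breadth-first walk of the internal links. In this sketch, the site structure is a made-up dictionary mapping each page to the pages it links to, and `click_depths` is a name of my own.

```python
from collections import deque

def click_depths(links: dict, home: str) -> dict:
    """Return the minimum number of clicks needed to reach each page from home."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit gives the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

# Hypothetical site structure, for illustration only.
site = {
    "/": ["/blog", "/about"],
    "/blog": ["/blog/cloaking"],
    "/blog/cloaking": ["/"],
}
print(click_depths(site, "/"))
# {'/': 0, '/blog': 1, '/about': 1, '/blog/cloaking': 2}
```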

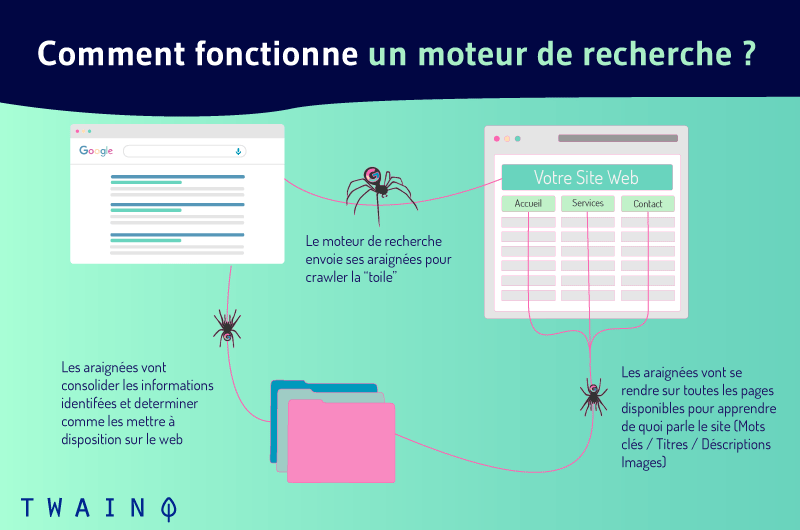

In order to understand the interest of cloaking in improving and increasing the crawl budget of your site, it is important to have a general idea of how crawlers work. What do they actually do on your website?

It is nothing extraordinary. The crawlers are actually programmed to perform a number of tasks after accessing your website

They crawl a large number of URLs for which they index the contents individually

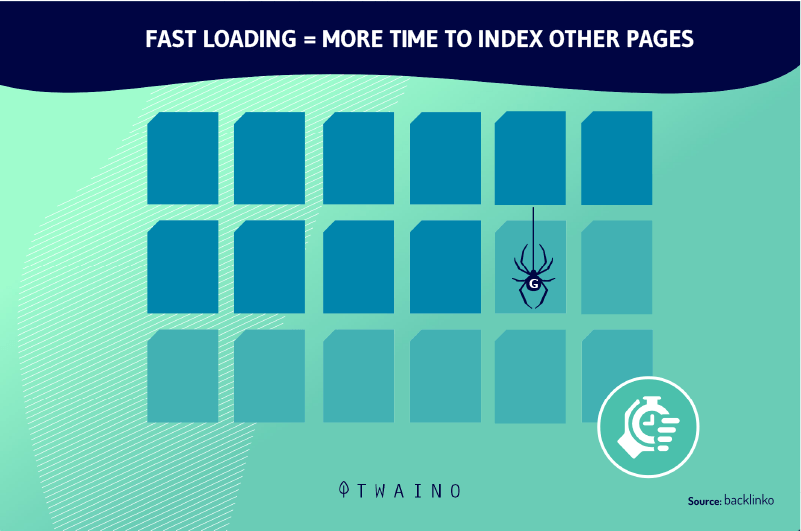

In order to optimize your crawl budget, it is therefore important to ensure that the crawler is not distracted and occupied by irrelevant and/or useless elements.

As a first step, it is advisable to analyze the log files, and then to delete the URLs that are not relevant for your SEO.
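As an illustration of this first step, the sketch below counts how many times a crawler requested each URL. It assumes that the server writes its logs in the widely used “combined” format and that the crawler can be recognized by a token in its user agent. The sample lines and addresses are invented.

```python
import re
from collections import Counter

# One line of the "combined" access log format (assumed here).
LOG_PATTERN = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"(?P<referer>[^"]*)" "(?P<user_agent>[^"]*)"$'
)

def crawler_hits_per_path(lines, crawler_token="googlebot"):
    """Count requests per URL path for user agents containing crawler_token."""
    hits = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line.strip())
        if match and crawler_token in match.group("user_agent").lower():
            hits[match.group("path")] += 1
    return hits

sample_log = [
    '192.0.2.10 - - [10/Oct/2023:13:55:36 +0000] "GET /style.css HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '192.0.2.10 - - [10/Oct/2023:13:55:37 +0000] "GET /blog/cloaking HTTP/1.1" 200 2326 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '198.51.100.7 - - [10/Oct/2023:13:56:01 +0000] "GET /blog/cloaking HTTP/1.1" 200 2326 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]
for path, count in crawler_hits_per_path(sample_log).most_common():
    print(path, count)
```

Sorting the result shows at a glance which URLs consume the crawler's visits.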

Of course, it is better to keep everything that is JavaScript or CSS files in the background so that the bots can concentrate more on your HTML pages.

It is therefore recommended to cloak (hide or redirect) the JS or CSS files in order to reserve the vast majority of your crawl budget for crawling the most important content.

These are all tips to better exploit your crawl budget thanks to cloaking.

3.2. Cloaking in relation to visitor behavior

This use case, it should be noted, is a very particular application of the technique.

At first sight it does not stand out, and for good reason: it is not really cloaking as we know it, but a rather ambiguous form that is treated, rightly or wrongly, as cloaking.

To support this usage and make it easier to understand, let’s create a context.

Let’s assume that you have a website on which you have planned an iterative and changing welcome device for visitors coming from Google

That is, a system that receives users in different ways. For example, you set up a block for this purpose at the top of the page, where you propose, depending on the user’s request, a welcome message associated with either:

- A request to subscribe to RSS feeds and/or the newsletter;

- A suggestion of some additional articles to read;

- And many other things.

Here, the fact that the search engine is not entitled to all or part of these proposals while the Internet user has access to them is considered as cloaking.

For Google, the treatment you give to users is certainly not the same as that offered to crawlers.

The same goes for web pages where users are systematically redirected to pages other than those initially found in the Google SERPs

3.3. Cloaking in A/B tests or multivariate tests

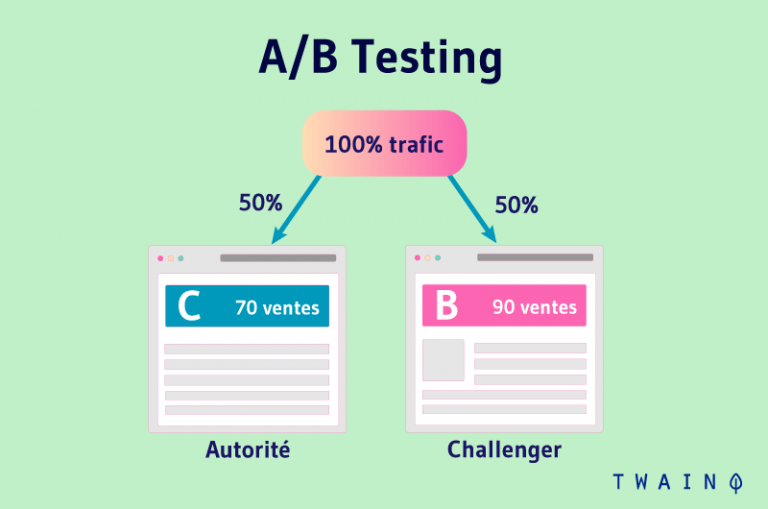

An A/B test, or A/B testing, is a marketing approach that makes it possible to test several layouts of the components of a web page. To do this, users are offered modified variants of the said web page.

For example, the site displays a purchase page A to a visitor 1 and a purchase page B to a visitor 2. This test allows us to evaluate the productivity and performance of the different pages
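One simple way to decide which visitor sees which page is to derive the variant from a stable visitor identifier. This is only a sketch under my own assumptions: the identifier (for example a cookie value) and the function name `assign_variant` are hypothetical, and testing tools have their own assignment logic.

```python
import hashlib

def assign_variant(visitor_id: str, variants=("A", "B")) -> str:
    """Map a visitor identifier to a variant, always the same one for that visitor."""
    digest = hashlib.sha256(visitor_id.encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]

for visitor in ("visitor-1", "visitor-2", "visitor-3"):
    print(visitor, assign_variant(visitor))
```

Note that the assignment never looks at the user agent: a crawler is placed in a variant exactly like any other visitor.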

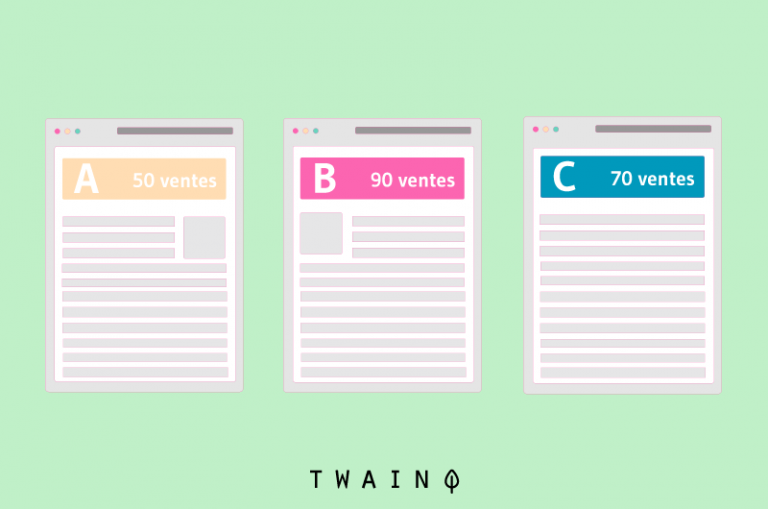

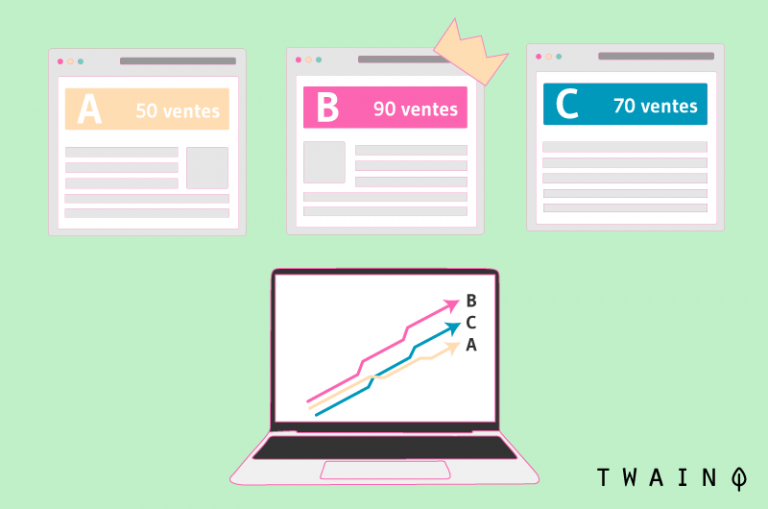

Multivariate testing (MVT) is a derivative of A/B testing. An MVT consists of creating a huge number of versions of a single page that will be sent to several categories of visitors.

For example, by setting up a multivariate test, you will be able to test 3 different headers, 5 different ads and 2 different images

Each combination will be put online randomly, so you will get a total of 3 x 5 x 2 = 30 different variants for the same page. The objective is to define the perfect combination (header + ad + image) for your page.
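The count above is a simple Cartesian product, which can be checked in a few lines of Python. The element names are placeholders.

```python
from itertools import product

headers = ["header-1", "header-2", "header-3"]
ads = [f"ad-{number}" for number in range(1, 6)]
images = ["image-1", "image-2"]

# Every possible (header, ad, image) combination.
variants = list(product(headers, ads, images))

print(len(variants))  # 30
print(variants[0])    # ('header-1', 'ad-1', 'image-1')
```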

Don’t worry, A/B or multivariate testing techniques, when applied in a standard way, respect the Google guidelines.

Indeed, Google has carefully and rigorously codified the realization of multivariate tests.

For the search engine, the ethical use of multivariate testing tools is not a form of cloaking

We recommend constructive testing: The optimization of a site’s pages benefits both the advertisers, since the number of conversions increases, and the users, who find effective information.

The search engine also provides information on the general measures to be taken to ensure that A/B or multivariate tests are not considered cloaking and penalized as such.

Thus, Google recommends that:

- Variants respect the essence of the original page content so that neither the meaning nor the general impression of the users is changed.

- The source code must be updated regularly. Of course, the test must be stopped immediately after sufficient data has been collected.

- The original page should always be the one offered to the majority of users. You can use a site optimizer to briefly run the winning combination at the end of the test, but you must pay attention to the source code of the test page to quickly recognize the winning combination.

The search engine also reserves the right to take action against websites that do not comply with its instructions and those whose users it feels are being abused and/or deceived.

Chapter 4: Cloaking in SEO: A false good idea?

By now, you understand the cloaking technique as a whole. However, some grey areas remain:

- Is cloaking a recommended technique in SEO?

- What is its place according to Google?

- Ultimately, is it a recommended technique?

In this chapter, we will try to shed light on all these grey areas specific to the technique.

4.1. Cloaking according to Google: A controversial technique?

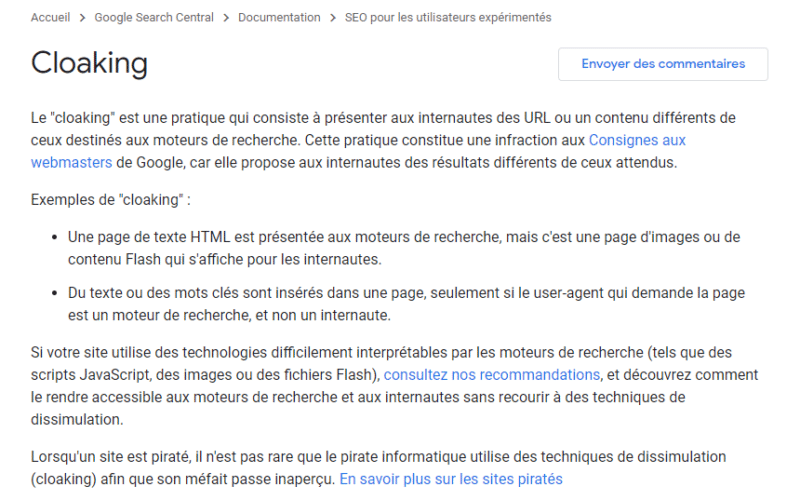

With the search engine, everything is a little clearer, cloaking is inadmissible.

Google officially says

“Cloaking is a technique that consists of offering users different URLs or content than those intended for search engine bots. This practice is a violation of Google’s guidelines, as it provides users with different results from those expected.”

4.2. Cloaking: SEO White or Black Hat?

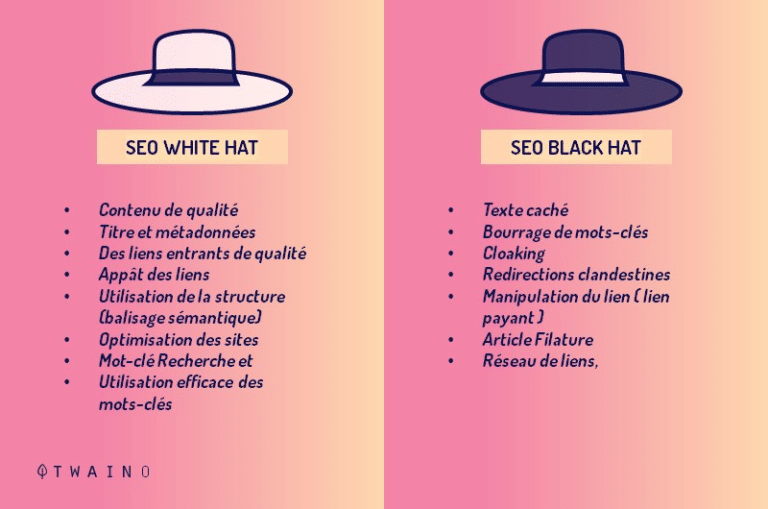

Let’s do a little reminder. In terms of natural referencing (SEO), there are generally two main families of practices: white hat SEO and black hat SEO.

As you can imagine, white hat refers to all good SEO practices as opposed to black hat which refers to all the dubious and prohibited SEO practices.

In fact, white hat SEO is the respect of the directives of the search engines (mainly Google) in all transparency in the optimization of websites

Here the quality, relevance and added value of the content are the cornerstone of any good organic SEO

On the other hand, black hat SEO consists globally in luring, deceiving and diverting the algorithms of search engines for one and the same reason: to obtain a better positioning in the rankings

Almost all the means used in black hat SEO are prohibited (techniques formally banned by search engines).

What about cloaking?

As we have seen, the main principle of cloaking is to present different content depending on the type of visitor (a human user or a robot). Most of the time, part of the content is hidden either from the users or from the indexing robots

This cloaking tactic is clearly considered as black hat.

Ultimately, cloaking is an unethical black hat method, contrary to Google’s guidelines and duly prohibited. It aims to quickly and fraudulently obtain a better position in the SERPs.

Nevertheless, not all techniques and uses of cloaking are malicious, you know that!

4.3. Cloaking: Is it really a good practice?

In view of all that has been previously developed, the logical and justified answer would be: NO!

Cloaking in addition to its various uses can also help to gain places in the SERPs

To protect themselves from cloaking, Google and the other search engines take severe measures

- Penalties

- Cloaked crawlers

- Whistleblower system

- Webspam Team

- Etc

The algorithmic and manual sanctions of the search engine are particularly formidable.

They concern all or part of the website and can go as far as the permanent removal of the site from the Google index. This is no idle threat: in 2006, some (cloaked) sites of the BMW brand were penalized in this way.

In the hands of experts and professionals, applied in accordance with the prescriptions of search engines and with good intentions, cloaking has serious advantages.

Chapter 5: Other questions about cloaking

5.1. What is cloaking?

Cloaking is a poor search engine optimization technique that consists of presenting different content or URLs to human users and search engines. This practice is considered a violation of Google’s guidelines, as it provides visitors with different results than they expected.

5.2. Is cloaking illegal?

Cloaking is considered a Black Hat SEO practice and is therefore illegal (according to the “law” of Google). The search engine can penalize, or even permanently ban from its index, any site that engages in this practice.

5.3. What are the different types of cloaking?

The main types of cloaking are:

- “Agent Name Delivery” focused on a user agent;

- “IP Delivery” based on the IP address;

- By JavaScript, Flash or DHTML;

- “Old school” through a text or an invisible block;

- Through HTTP_Referer and HTTP Accept-Language;

- Host-based.

5.4. What is cloaking on Facebook?

On Facebook, cloaking is a technique used to bypass the platform’s review processes in order to show users content that does not meet the standards of the community guidelines.

5.5. How do I check for cloaking?

In a cloaking checker tool, enter the URL of the website you want to check. Click the “Check cloaking” button, and it will test the URL. The results will indicate whether or not cloaking was detected. If you find cloaking on your website, you should be concerned, as your website may be penalized by search engines.
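I do not know how any particular checker tool works internally, but the general idea can be sketched as follows: obtain the same URL twice, once as a browser and once as a crawler, then compare the visible text of the two responses. The fetching step is left out here. The function names, the word-level comparison and the 0.5 threshold are all assumptions of mine.

```python
import re
from difflib import SequenceMatcher

def visible_words(html: str) -> list:
    """Strip scripts, styles and tags, then return the lowercase words."""
    without_code = re.sub(
        r"<(script|style)\b.*?</\1>", " ", html, flags=re.IGNORECASE | re.DOTALL
    )
    text = re.sub(r"<[^>]+>", " ", without_code)
    return re.findall(r"\w+", text.lower())

def content_similarity(html_for_browser: str, html_for_crawler: str) -> float:
    """Return a ratio between 0 (nothing in common) and 1 (same words, same order)."""
    matcher = SequenceMatcher(
        None, visible_words(html_for_browser), visible_words(html_for_crawler)
    )
    return matcher.ratio()

def looks_cloaked(html_for_browser: str, html_for_crawler: str, threshold: float = 0.5) -> bool:
    """Flag the page when the two versions share too little text."""
    return content_similarity(html_for_browser, html_for_crawler) < threshold

browser_page = "<html><body><h1>Cheap flights</h1><p>Compare prices to Paris.</p></body></html>"
crawler_page = "<html><body><p>casino poker bonus casino slots jackpot</p></body></html>"
print(looks_cloaked(browser_page, crawler_page))  # True
print(looks_cloaked(browser_page, browser_page))  # False
```

A low score is only a signal: pages with personalized or rotating content can legitimately differ between two requests.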

In summary

Cloaking is a technique not recommended by Google and its application is not without consequences.

Thanks to this article, you now know enough about this practice and its multiple aspects. It is up to you to form your own opinion and/or gain your own experience on the subject.

If you know of other cloaking techniques or applications, don’t hesitate to share them with us in the comments.

See you soon!