DUST, or Different URLs with Similar Text, occurs when several different URLs on a site lead to the same page or to near-identical content. This is often content that can be reached from various locations on the same domain or through various means. Also known as duplicate URLs, DUST confuses search engines, whose crawlers must crawl and index every duplicate URL. It reduces crawling efficiency and dilutes the authority of the main page.

Content creation is the primary responsibility of webmasters when it comes to SEO. Although the goal is to create unique content, this is hard to achieve, even before counting the content scrapers that will come and copy yours.

Duplicate content is estimated to account for 25-30% of web content, and not all of it is the result of dishonest intent. It can also be created unintentionally on a site without the webmasters realizing it.

This is the case with Different URLs with Similar Text (DUST), where a single URL can be duplicated hundreds of times. Most websites face this problem, and its causes are numerous.

But concretely:

- What is DUST?

- How do DUST URLs appear on a site?

- What are the potential problems caused by DUST?

- How to correct the problems related to DUST?

In this article, I will answer these questions clearly in order to demystify DUST. We will also see how to prevent this problem from occurring on a site.

So follow along!

Chapter 1: What is DUST and what problems does it pose in terms of SEO?

Duplicate content is one of the top 5 SEO problems that sites face and DUST is one form of this type of content among many others.

This chapter discusses the definition of DUST and the SEO problems it can cause a site.

1.1. DUST: What is it?

Duplicate content is common on the web. Google defines it as substantive blocks of content, within a domain or across domains, that either completely match other content or are appreciably similar.

Through this definition, we note that duplicate content occurs at two levels: on the same domain or between several domains.

When it occurs on the same site, it can be in the form of content that appears in different pages of a domain or a page that is accessible at multiple URLs.

In the latter case, duplicate content is called DUST. While other forms of duplicate content are related to content creation, DUST is more the result of URL duplication.

This URL duplication is very common and often comes from a technical issue that any website may face at some point. The following URLs all point to the same home page and are an example of DUST:

- www.votresite.com/;

- votresite.com;

- http://votresite.com;

- http://votresite.com/;

- https://www.votresite.com;

- https://votresite.com.

Although these URLs look alike and lead to the same page, their syntax differs, and this can cause problems for a website.
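To see how a site can collapse these variants into one address, here is a minimal Python sketch that rewrites each of them to a single preferred form. It assumes, purely for illustration, that the preferred version is https://www.votresite.com/ and that bare host names without a scheme should be read as https; `normalize` and the `PREFERRED_*` constants are hypothetical names, not part of any SEO tool.

```python
from urllib.parse import urlsplit, urlunsplit

# Hypothetical preferred version of the site (an assumption for this sketch).
PREFERRED_SCHEME = "https"
PREFERRED_HOST = "www.votresite.com"


def normalize(url: str) -> str:
    """Rewrite a URL variant of the site to its single preferred form."""
    # Bare host names such as "votresite.com" have no scheme; add one so
    # urlsplit puts the host in netloc instead of in path.
    if "://" not in url:
        url = "https://" + url
    parts = urlsplit(url)
    host = parts.netloc.lower()
    # Only rewrite hosts that belong to this site (with or without www).
    if host.removeprefix("www.") != PREFERRED_HOST.removeprefix("www."):
        return url
    # An empty path and "/" address the same home page; keep the slash.
    path = parts.path or "/"
    return urlunsplit((PREFERRED_SCHEME, PREFERRED_HOST, path, parts.query, ""))


variants = [
    "www.votresite.com/",
    "votresite.com",
    "http://votresite.com",
    "http://votresite.com/",
    "https://www.votresite.com",
    "https://votresite.com",
]

print(len(set(variants)))                # 6 distinct strings
print({normalize(v) for v in variants})  # {'https://www.votresite.com/'}
```

Six different strings reduce to one address once scheme, host and trailing slash are made consistent. Which form you pick as preferred matters less than applying it everywhere. The sketch uses `str.removeprefix`, so it needs Python 3.9 or later.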

1.2. Why is DUST or duplicate URL a problem?

Unlike other forms of duplicate content, DUST does not directly harm the SEO of a website. Google generally understands that this kind of duplication is not malicious.

However, DUST poses three main problems for search engines. They cannot easily:

- Identify which URLs to include in or exclude from their index;

- Know which URL to assign link metrics such as trust, authority and link equity to;

- Decide which URL to rank in search results.

To understand these issues, let’s look at how search engines work. They produce search results through three main functions:

- Crawling;

- Indexing;

- Ranking.

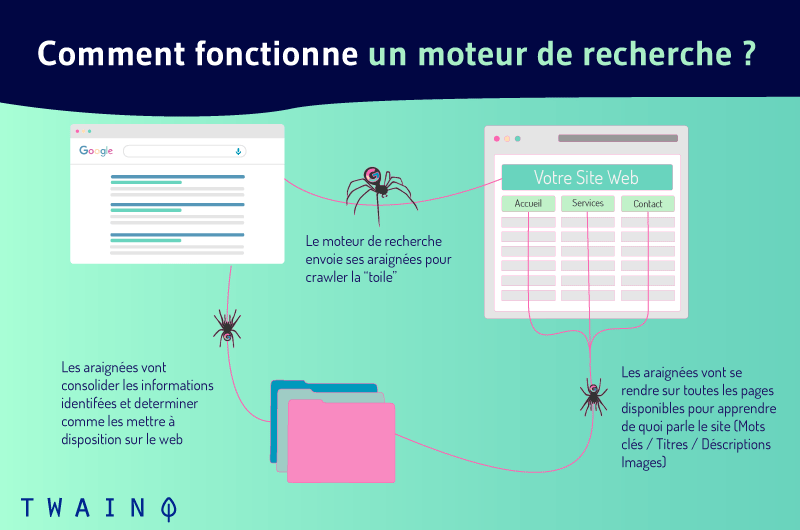

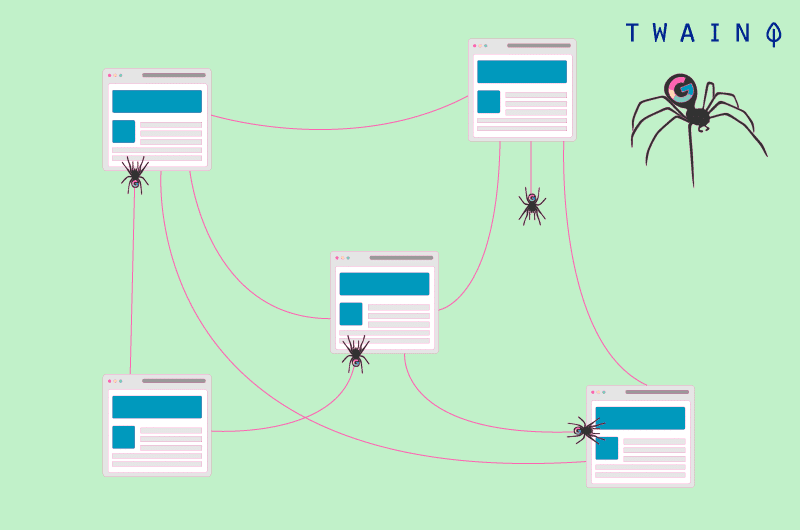

Web crawling is the process by which search engines discover new and recently updated web content. They send out robots called spiders that move from URL to URL on a site.

When multiple URLs lead to the same page, spiders treat them as different URLs and crawl each one. They then discover that these URLs serve the same content and treat it as duplicate content.

During the crawl, the bots can add the interesting new resources they find to the index, a huge database of URLs.
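A crawler can only notice that two URLs are duplicates after fetching both. The Python sketch below illustrates the idea with a deliberately simple fingerprint: collapse whitespace, lowercase the text, hash it with SHA-256, and group URLs that share a hash. The URLs and page contents are made-up examples, and real search engines use far more tolerant near-duplicate detection than an exact hash.

```python
import hashlib
from collections import defaultdict


def fingerprint(html: str) -> str:
    """Hash page text after collapsing whitespace and case (simplified)."""
    normalized = " ".join(html.split()).lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def group_duplicates(pages: dict[str, str]) -> list[list[str]]:
    """Return groups of URLs whose content has the same fingerprint."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url, html in pages.items():
        groups[fingerprint(html)].append(url)
    # Only groups containing more than one URL are DUST candidates.
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical crawl results: three URLs, two of which serve the same page.
crawled = {
    "https://shoponline.com/blue-shirt": "<h1>Blue shirt</h1>  <p>100% cotton</p>",
    "https://shoponline.com/blue-shirt?size=M": "<h1>Blue Shirt</h1>\n<p>100% cotton</p>",
    "https://shoponline.com/red-shirt": "<h1>Red shirt</h1><p>100% cotton</p>",
}

print(group_duplicates(crawled))
```

Note that both duplicates had to be fetched before the match was found, which is exactly the crawl cost discussed in section 1.3.1.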

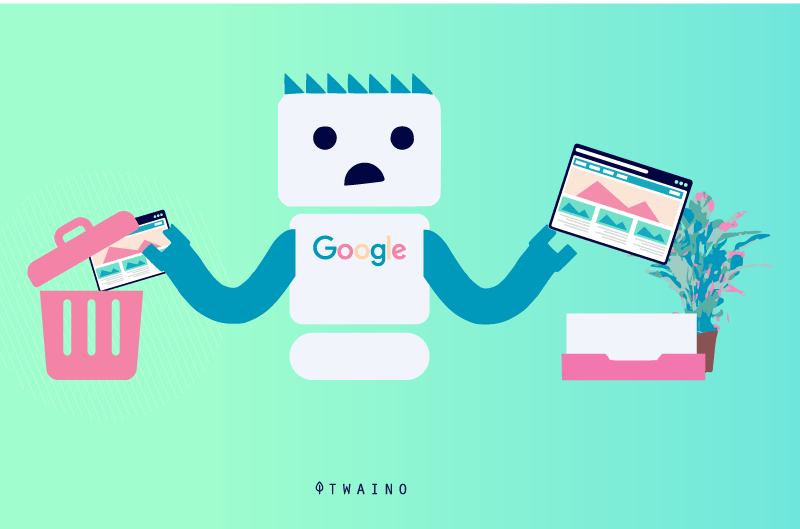

Although duplicate indexing can happen, search engines try to avoid indexing the same page several times and focus on pages that offer unique content. They therefore have to choose which of the duplicate URLs to index.

The URL they pick may not be the right one, or the one you want indexed.

Moreover, search engines select the relevant results for a user’s query from the indexed content. A page that is not in the index therefore cannot appear in the search results.

1.3. How can DUST hurt your SEO?

Duplicate URLs can harm a site’s SEO in several ways.

1.3.1. Reduced crawl efficiency

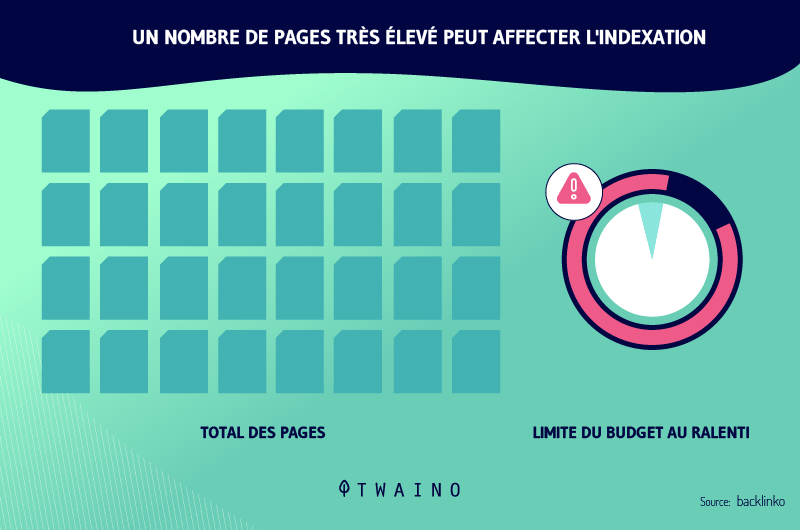

Because duplicate URLs all lead to the same page but robots still crawl each of them, they reduce the efficiency of the crawl.

Indeed, Google allocates a crawl budget to every site. This budget represents the number of URLs that crawlers can and want to crawl on a site. The more URLs there are, the faster the crawl budget is used up.

Once the budget is exhausted, crawlers stop, and they may miss some of the site’s relevant pages.
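A back-of-the-envelope calculation shows how quickly duplicates eat into the budget. The figures are invented for illustration (a budget of 1,000 URL fetches for a site with 500 real pages), and the model assumes the crawler fetches every URL variant of a page before moving on to the next page, which real crawlers do not strictly do.

```python
def unique_pages_crawled(budget: int, pages: int, variants_per_page: int) -> int:
    """Distinct pages reached when each page has N URL variants (toy model)."""
    total_urls = pages * variants_per_page
    if total_urls <= budget:
        return pages  # Everything fits in the budget.
    # Each page "costs" variants_per_page fetches in this simplified model.
    return budget // variants_per_page


for variants in (1, 2, 4, 6):
    print(variants, unique_pages_crawled(budget=1000, pages=500, variants_per_page=variants))
```

With one or two variants per page every page still gets crawled, but with four variants only 250 of the 500 pages are reached, and with six only 166.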

It is therefore crucial that site owners work to optimize the crawl budget to allow the crawlers to follow all relevant URLs.

1.3.2. Loss of traffic and dilution of equity

When search engines index an odd-looking URL from a set of duplicates, users may distrust it when it appears in search results. This can lead to a loss of traffic even if the page ranks well.

Robots may also index several of the duplicate URLs. In that case, each URL is treated as a separate page, with its own authority and ranking.

This dilutes the visibility of all the duplicates: backlinks end up spread across the duplicate URLs when they could all point to the main URL.

1.4. How do duplicate URLs appear?

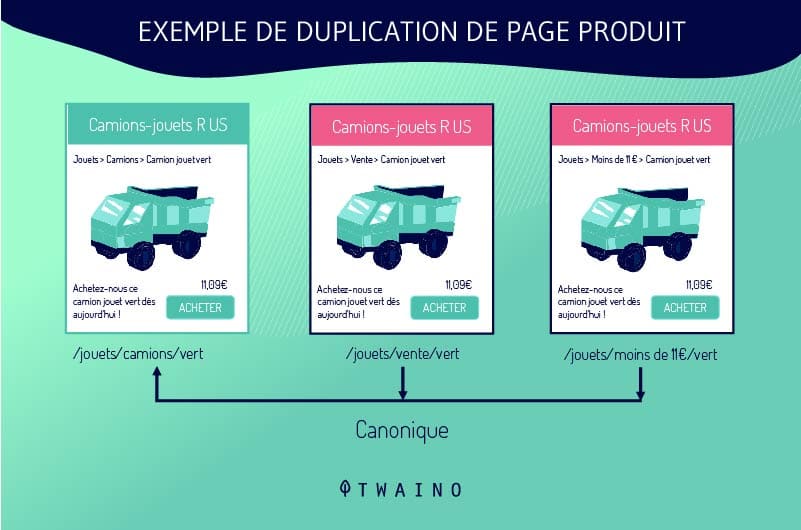

Webmasters rarely duplicate URLs intentionally. DUST often occurs when sites use a system that creates multiple versions of the same page. This situation is common on e-commerce sites, especially on product pages.

Product pages are often configured to let customers choose between several sizes or colors while remaining on the same page. The content management system may then generate several DUST URLs that all lead to a single page.

For example, if a site offers a blue shirt in three different sizes, the system can generate the following URLs:

- shoponline.com/blue-shirt-A;

- shoponline.com/blueshirt-B;

- shoponline.com/blueblueblue-C.

Duplicate URLs also result from the filters sites offer to help visitors search. Each combination of filters generates its own DUST URL, which search engines unfortunately treat as a separate page.

Sites also create duplicate URLs when they publish a printable version of a page. Other sources of duplicate URLs include:

- Dynamic URLs;

- Old and forgotten versions of a page;

- Session IDs.

Also note that URLs are sensitive to the presence or absence of a trailing slash (/). For example, Google treats example.com/page/ and example.com/page as two different URLs.

If both return the same content, they are therefore duplicate URLs.

Chapter 2: How to identify DUST URLs and how to correct them

Correcting DUST URLs can have significant effects on the SEO of a website. In this chapter we will discover how to detect these URLs and the different ways in which they can be treated.

2.1. How to find duplicate URLs?

It is essential to detect DUST URLs in order to correct this problem. Fortunately, there are tips and online tools that allow you to check for duplicate URLs on a domain.

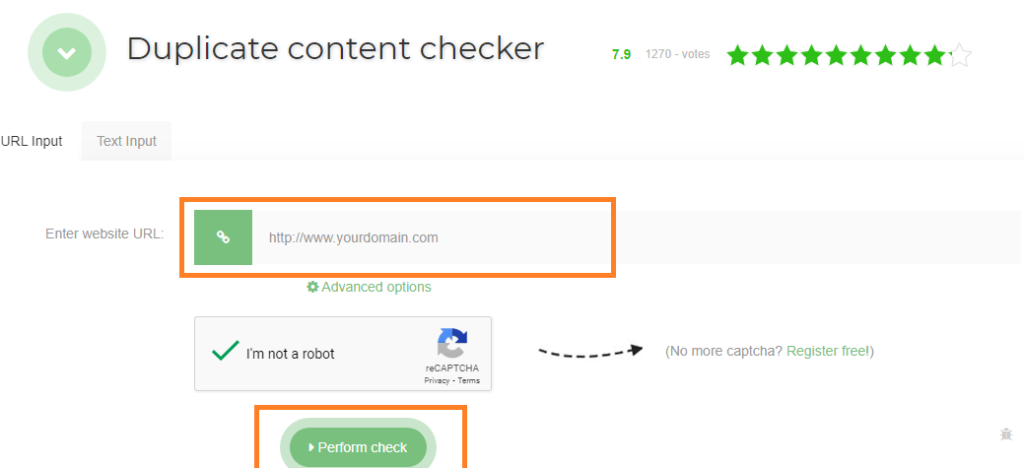

2.1.1. Duplicate URL verification tools

Among the duplicate URL verification tools, I suggest SEO Review Tools. Just enter the target URL in the field provided and click on “Perform the verification”. The tool will show you the number of duplicates of the URL you entered.

Siteliner is also a powerful tool for finding duplicate URLs on a domain. It examines your domain and shows you a report of the duplicate content on your site.

To use this tool, just enter your domain in the search bar. When Siteliner displays the analysis of your site, click on “Duplicate Content” to see the duplicate URLs.

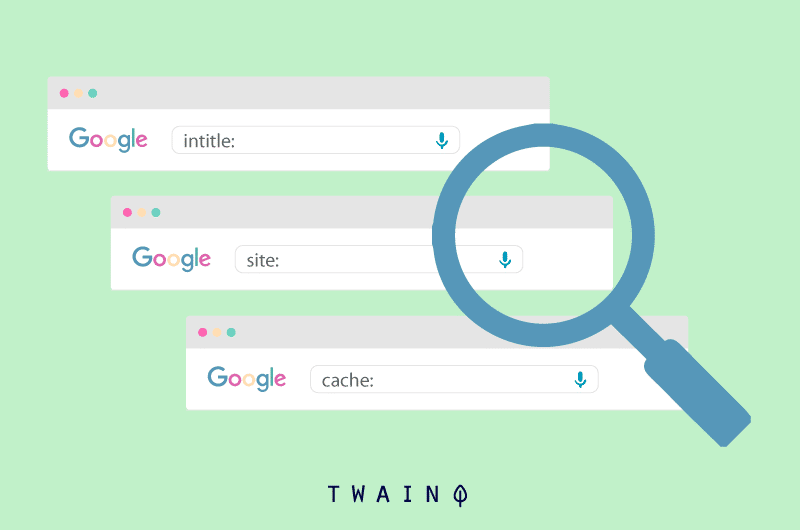

2.1.2. Using the site: and intitle: search operators

You can also check your site’s duplicate URLs by combining the search operators site:example.com intitle:“your keyword”. Google will show you the URLs on your domain (example.com) whose title contains the targeted keyword.

All you have to do is look through the URLs Google displays to spot those that lead to the same page. Now that you can find duplicate URLs on your site, let’s move on to the corrective actions you can take.

2.2. How to correct DUST URLs?

2.2.1. The 301 redirect

A 301 redirect permanently sends users and search engines from the address they requested to a different one. For DUST URLs, it consists of redirecting the duplicates to the original URL.

This way, the different URLs that could be ranked separately are merged into one and stop competing with each other. In addition, the redirected URLs pass their link equity to the main URL.

The main URL thus benefits from the ranking power of its duplicates, which can significantly improve the SEO of the page concerned. Note, however, that a 301 redirect does not delete the duplicate URLs: they still exist, but they now send visitors to the main URL.

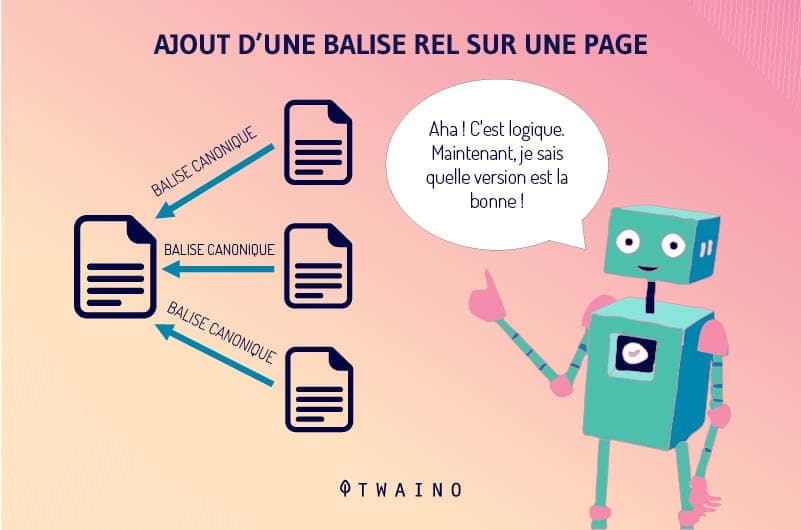

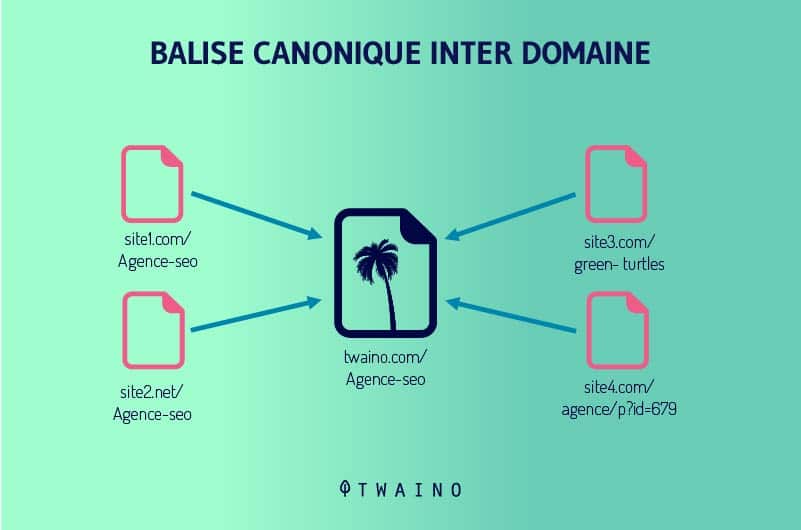
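As an illustration, here is a minimal Python WSGI application that answers any request on a non-preferred scheme or host with a 301 pointing to the same path on the preferred address. The preferred address https://www.votresite.com is an assumption borrowed from the URL examples in chapter 1, and the `call` helper exists only to exercise the app without a server. On a real site this rule usually lives in the web server or CDN configuration, and behind a proxy `wsgi.url_scheme` may not reflect the visitor’s original scheme.

```python
from urllib.parse import urlunsplit

# Assumed preferred address for this sketch.
PREFERRED_SCHEME = "https"
PREFERRED_HOST = "www.votresite.com"


def app(environ, start_response):
    scheme = environ.get("wsgi.url_scheme", "http")
    host = environ.get("HTTP_HOST", "").lower()
    path = environ.get("PATH_INFO", "") or "/"
    query = environ.get("QUERY_STRING", "")

    if scheme != PREFERRED_SCHEME or host != PREFERRED_HOST:
        # Permanent redirect to the same path and query on the preferred URL.
        location = urlunsplit((PREFERRED_SCHEME, PREFERRED_HOST, path, query, ""))
        start_response("301 Moved Permanently", [("Location", location)])
        return [b""]

    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8")])
    return [b"Main page"]


def call(environ):
    """Hypothetical test helper: run the app and capture its response."""
    captured = {}

    def start_response(status, headers):
        captured["status"] = status
        captured["headers"] = dict(headers)

    body = b"".join(app(environ, start_response))
    return captured["status"], captured["headers"], body


status, headers, _ = call({"wsgi.url_scheme": "http", "HTTP_HOST": "votresite.com",
                           "PATH_INFO": "/page", "QUERY_STRING": ""})
print(status, headers["Location"])  # 301 Moved Permanently https://www.votresite.com/page
```

Keeping the path and query in the `Location` header matters: redirecting every duplicate to the home page would lose the page-to-page mapping that lets each duplicate pass its equity to its own main URL. To try it locally, the standard library’s `wsgiref.simple_server.make_server` can serve `app`.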

2.2.2. The rel=canonical tag

The rel=canonical tag remains one of the best ways to transfer the SEO value of a duplicate page to another page. It is an HTML tag that tells search engines which URL they should add to their index. Google treats it as a strong hint rather than a strict directive.

Google defines the canonical URL as the most representative URL from a group of duplicate pages. This is the URL that crawlers will visit most regularly, which helps optimize a site’s crawl budget. The duplicates are crawled less frequently.

Google and other search engines consolidate the SEO signals of duplicate URLs into the canonical URL. The rel=canonical tag is a link element added to the head section of an HTML page.

Search engines treat the other pages as duplicates of the canonical URL, but they do not make them disappear. This is an advantage, since you certainly do not want all duplicates removed: they may still be useful to visitors, for example to choose a size or color.

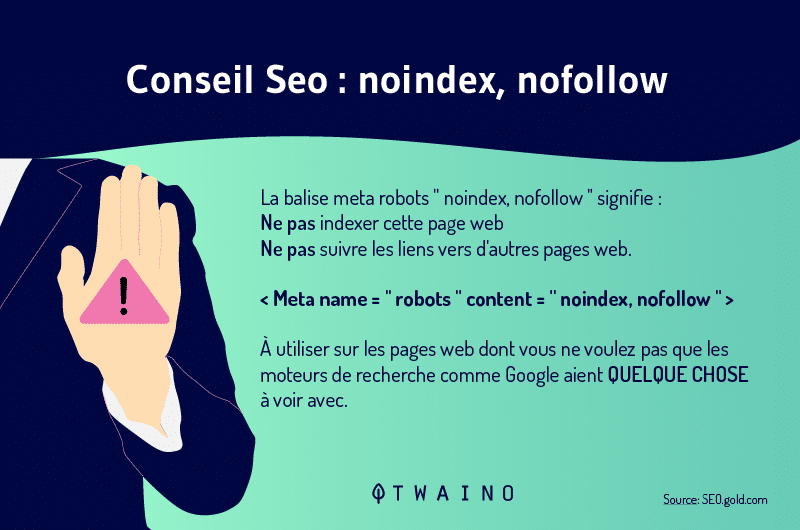

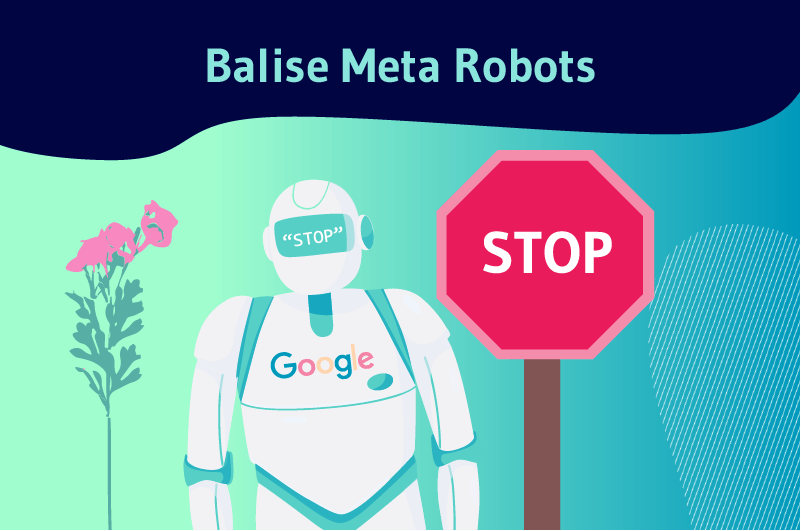
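The tag itself is a single `<link rel="canonical" href="...">` element in the page’s head. To audit which canonical URL each duplicate declares, a short Python sketch built on the standard library’s `html.parser` can extract it. The sample page and URLs are hypothetical, and the class name `CanonicalFinder` is invented for this example.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> element."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attributes = dict(attrs)
        # rel can hold several space-separated values, so split it.
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values and attributes.get("href"):
            self.canonicals.append(attributes["href"])


page = """<html><head>
<title>Blue shirt, size M</title>
<link rel="canonical" href="https://shoponline.com/blue-shirt">
</head><body>...</body></html>"""

finder = CanonicalFinder()
finder.feed(page)
print(finder.canonicals)  # ['https://shoponline.com/blue-shirt']
```

Running this over every duplicate URL makes it easy to check that they all point to the same main URL, and that no page declares more than one canonical.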

2.2.3. Use of meta robots

Using meta robots is a simple and effective way to keep pages out of search results. It consists of adding a robots meta tag with the noindex and nofollow directives to the HTML code of a page.

The noindex directive tells search engines that you do not want a URL added to their index, and consequently not displayed in search results.

You just need to add noindex to the duplicate URLs to prevent them from competing with the main URL.

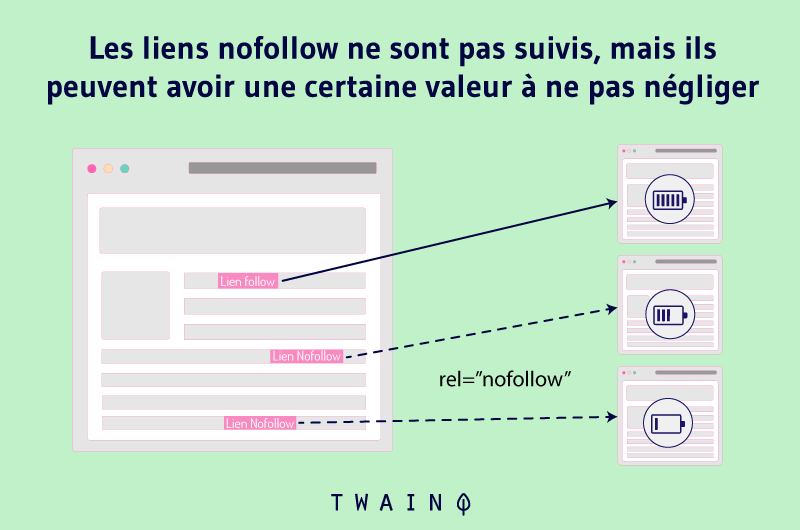

The nofollow directive tells Google not to follow the links on the page and not to pass their authority to the pages they point to. Spiders will therefore generally ignore the links on a page carrying this directive. (Used as a link attribute, rel="nofollow", it applies to a single link rather than the whole page.)

Adding it to duplicate URLs can therefore help you limit the problems related to URL duplication.

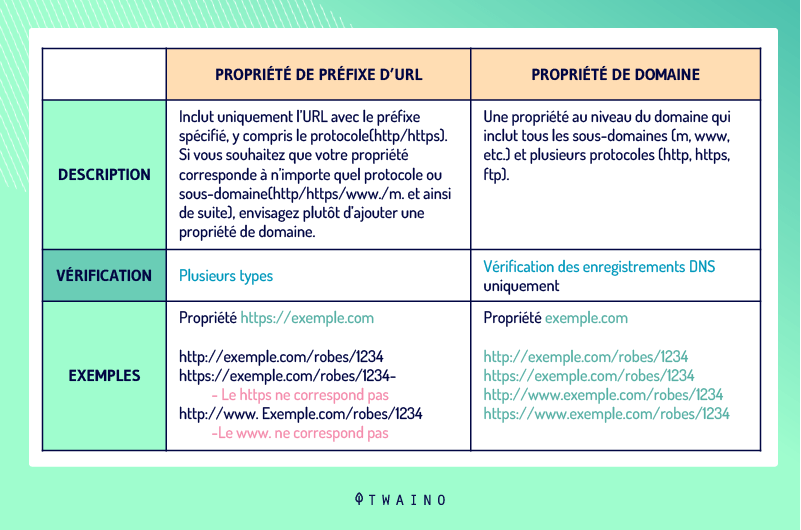
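On a duplicate page the tag typically reads `<meta name="robots" content="noindex, nofollow">`. The Python sketch below reads the directives a page declares, so you can check that the duplicates carry noindex while the main URL does not. The sample markup and the names `RobotsMetaReader` and `is_noindexed` are hypothetical, and the sketch ignores crawler-specific tags such as `name="googlebot"` as well as the `X-Robots-Tag` HTTP header.

```python
from html.parser import HTMLParser


class RobotsMetaReader(HTMLParser):
    """Gather directives from <meta name="robots" content="..."> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            # Directives are comma-separated and case-insensitive.
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def is_noindexed(html: str) -> bool:
    reader = RobotsMetaReader()
    reader.feed(html)
    return "noindex" in reader.directives


duplicate = '<head><meta name="robots" content="noindex, nofollow"></head>'
main_page = "<head><title>Blue shirt</title></head>"
print(is_noindexed(duplicate), is_noindexed(main_page))  # True False
```

A check like this is worth running before deployment: a noindex accidentally left on the main URL would remove the very page you want to rank.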

2.2.4. Adjustment in Google Search Console

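Inserting canonical tags and robots meta tags into hundreds of DUST pages is often a lot of work. Fortunately, Google Search Console settings can be part of the solution to DUST URLs. Note, however, that Google has since retired the preferred-domain setting and the URL Parameters tool described below (the latter in 2022), so these steps apply to the legacy version of Search Console.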

2.2.4.1. Setting the URL version of your site

Defining your domain’s preferred address can also help solve DUST issues. Webmasters can set a preferred domain in this tool and specify whether spiders should crawl certain URLs.

For example, instead of http://www.votresite.com, they can specify http://votresite.com as their domain’s address (note the absence of www). The same logic applies to the choice between the HTTP and HTTPS protocols.

To define your site’s URL version, go to Google Search Console and choose “Add property”.

2.2.4.2. Managing URL parameters

Configuring URL parameters in Google Search Console allows you to indicate which URL parameters Google should ignore. However, be careful not to enter incorrect settings, which could damage your site.

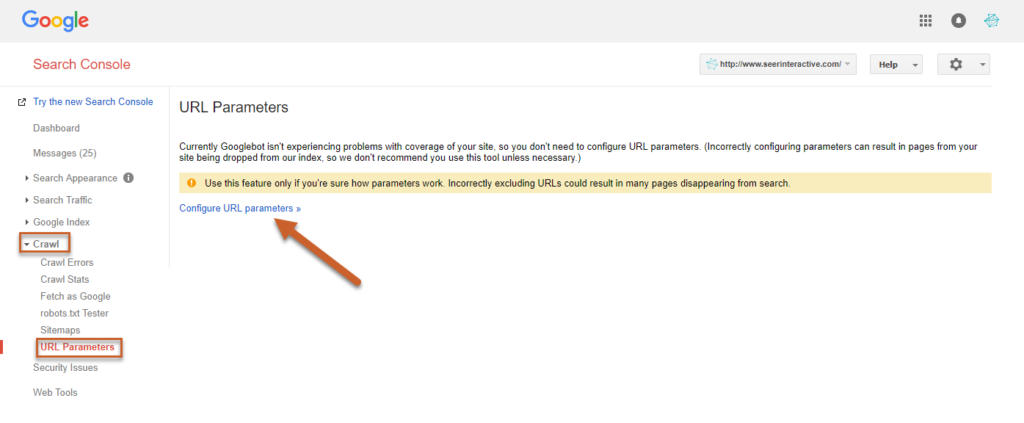

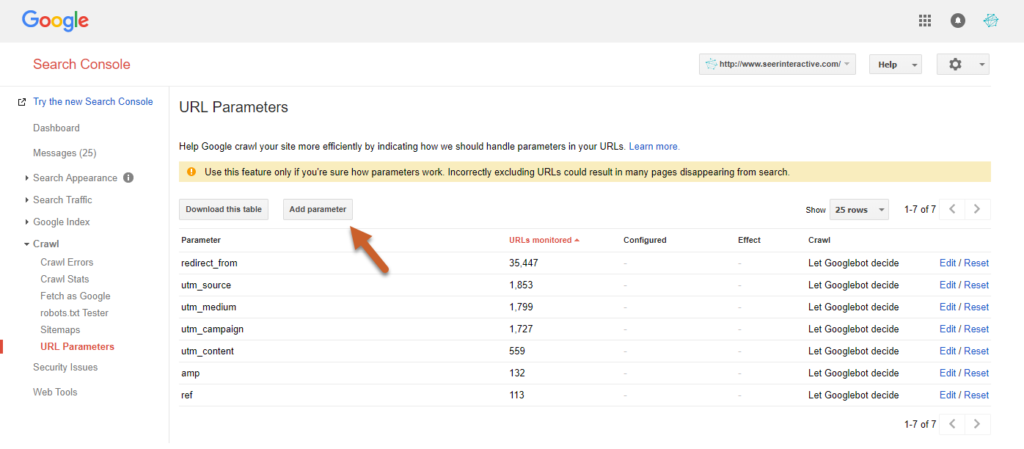

To do this, click on Crawl in the tool, then on URL Parameters, and click on “Configure URL parameters” to enter your settings.

Source: seerinteractive

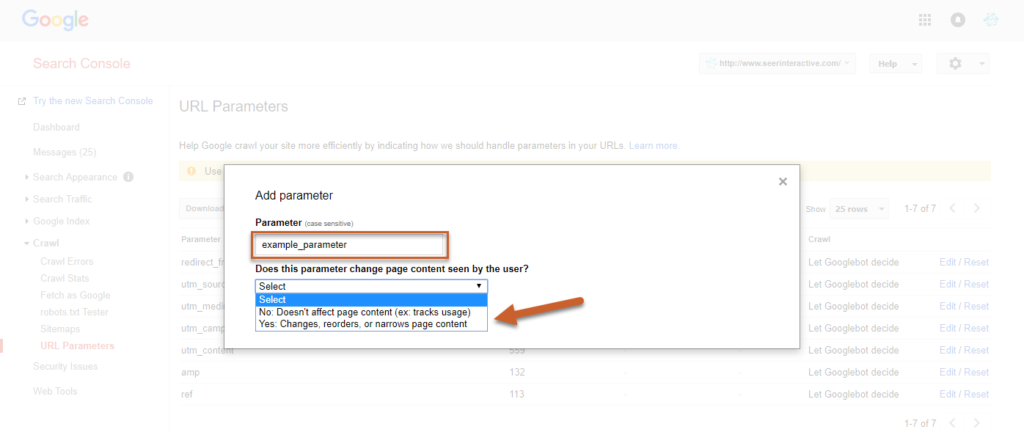

To save the parameter, indicate whether or not it changes the page content seen by users.

Source: seerinteractive

At this point, choose Yes to then specify how the parameter affects the content.

2.2.4.3. Declaring passive URLs

URL parameter management also allows you to declare certain URLs as passive so that Googlebot ignores them while crawling a site.

This is probably the most effective technique for removing clutter URLs from search results.

It is recommended to apply this technique temporarily, while your team adds canonical tags and sets up 301 redirects for the duplicates.

To declare some of your URLs passive, follow the same steps and choose No before saving. As a result, they will no longer appear in the SERPs. However, this technique has its drawbacks.

Unlike with the canonical tag, the main URL that remains after you declare the duplicates in the Google Search Console settings does not inherit any of their SEO value.

This is not a problem when the URLs marked as passive are new or have very little page authority. We therefore suggest checking the authority of the pages you want to add to the settings.

You can use a tool such as Ahrefs for this. When a page’s authority is high, do not mark its URL as passive, or you will lose valuable SEO benefits.

Chapter 3: Other frequently asked questions about DUST

This chapter is dedicated to questions that are often asked about DUST URLs.

3.1. How to avoid duplicate URLs?

While some DUST URLs are unavoidable, many duplicates can be avoided. Webmasters should always use a consistent URL format for all internal links on their domains.

For example, a webmaster should define the canonical version of their website and make sure that everyone who adds internal links to it follows this format.

If the canonical version of the site is www.example.com/, all internal links must point to http://www.example.com/ rather than http://example.com/. The only difference is the www prefix in the canonical version.
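One way to enforce this rule is to scan the internal links on your pages and flag any that do not start with the canonical version. The Python sketch below does this for a list of link targets. The canonical prefix comes from the example above, while the set of site hosts, the sample links and the function name `non_canonical_links` are assumptions for illustration.

```python
from urllib.parse import urlsplit

CANONICAL_PREFIX = "http://www.example.com/"  # canonical version from the example
SITE_HOSTS = {"example.com", "www.example.com"}  # hosts that count as internal


def non_canonical_links(hrefs: list[str]) -> list[str]:
    """Return internal links that do not use the canonical scheme and host."""
    flagged = []
    for href in hrefs:
        host = urlsplit(href).netloc.lower()
        # Relative links ("/contact") have no host and inherit the current
        # page's address, so they are skipped here.
        if host in SITE_HOSTS and not href.startswith(CANONICAL_PREFIX):
            flagged.append(href)
    return flagged


links = [
    "http://www.example.com/shoes",
    "http://example.com/shoes",
    "https://www.example.com/shoes",
    "/contact",
    "https://partner.org/",
]
print(non_canonical_links(links))
# ['http://example.com/shoes', 'https://www.example.com/shoes']
```

External links are left alone, and relative links are accepted because they resolve against whatever address the visitor is already on.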

3.2. Can all of the above strategies be used for DUST at the same time?

In reality, there is no single solution for dealing with duplicate URLs. Each of the solutions above has advantages and disadvantages. For example, 301 redirects can reduce crawl efficiency.

The same goes for the canonical tag: robots still crawl the duplicate URLs in order to find the canonical URL and index it. As for meta robots, they do not pass the authority of the duplicate URLs on to the original URL.

The declaration of passive URLs in the Google Search Console settings is specific to Google and does not affect other search engines.

The problem therefore persists on other search engines such as Bing, even once it is solved for Google.

Given all this, it is reasonable to combine these solutions appropriately to cover all potential problems. However, you need to analyze each of them to determine which ones best suit your needs.

3.3. What is a dynamic URL?

The use of dynamic URLs is one of the main causes of DUST. In general, a dynamic URL is generated from a database when a user submits a query, such as a search.

For example, when a user chooses the color of an item in an online store, the URL changes while the content of the page stays essentially the same. Dynamic URLs often contain characters such as ?, &, %, +, = and $.

In contrast, static URLs do not change and do not contain any URL parameters. They do not cause the crawling problems that dynamic URLs do.
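Based on the characters listed above, a rough heuristic can flag URLs as dynamic. This is only a sketch: the character set comes straight from the text, a `%` can also appear in ordinary percent-encoded static URLs, and the name `looks_dynamic` and the sample URLs are hypothetical.

```python
from urllib.parse import urlsplit

# Characters the text associates with dynamic URLs; "?" is handled by
# checking whether the URL has a query string.
DYNAMIC_CHARS = set("&%+=$")


def looks_dynamic(url: str) -> bool:
    """Rough heuristic: a query string, or dynamic-looking characters in the path."""
    parts = urlsplit(url)
    if parts.query:
        return True
    return any(char in DYNAMIC_CHARS for char in parts.path)


print(looks_dynamic("https://shoponline.com/blue-shirt"))                # False
print(looks_dynamic("https://shoponline.com/shirt?color=blue&size=m"))   # True
print(looks_dynamic("https://shoponline.com/search/q=shirt"))            # True
```

Treat the result as a list of URLs to review rather than a verdict: a flagged URL only becomes DUST if it serves the same content as another URL.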

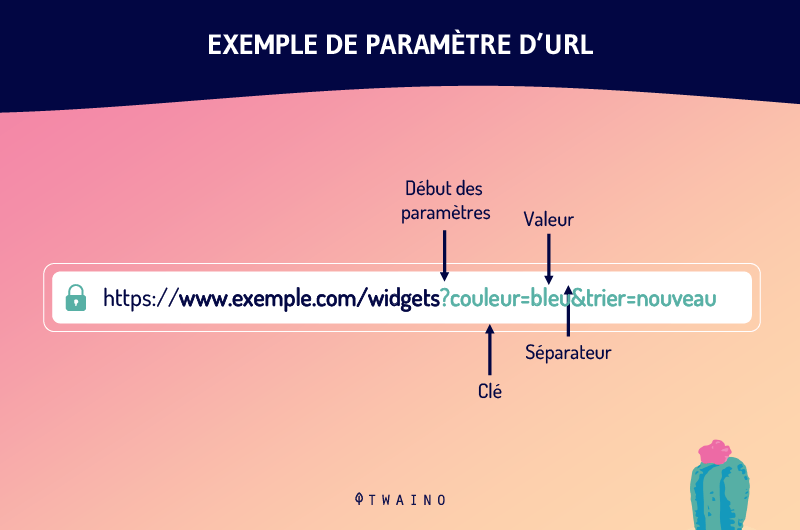

3.4. What is the URL parameter?

URL parameters are one of the causes of duplicate URLs. Also called URL variables, URL parameters are the variables that come after the question mark in a URL.

They are the pieces of information that define different features and classifications of a product or service. They can also determine the order in which information is displayed to a viewer.

Some of the most common use cases for URL parameters are:

- Filtering: for example ?type=size, ?color=reg or ?price-range=25-40

- Identifying: ?product=big-reg, ?categoryid=124 or ?itemid=24AU

- Translating: for example ?lang=fr or ?language=de.
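To see how fast parameters multiply URLs, the Python sketch below builds every combination of three hypothetical filters on one listing page with `urllib.parse.urlencode`, then uses `parse_qs` to split a query string back into its variables. The base URL, parameter names and values are invented for illustration.

```python
from itertools import product
from urllib.parse import parse_qs, urlencode, urlsplit

base = "https://shoponline.com/shirts"
colors = ["blue", "red", "white"]
sizes = ["s", "m", "l", "xl"]
sorts = ["price", "newest"]

# Every filter combination yields its own URL for the same listing page.
urls = [base + "?" + urlencode({"color": c, "size": s, "sort": o})
        for c, s, o in product(colors, sizes, sorts)]
print(len(urls))  # 24
print(urls[0])    # https://shoponline.com/shirts?color=blue&size=s&sort=price

# parse_qs splits a query string back into its variables (values are lists).
print(parse_qs(urlsplit(urls[0]).query))
# {'color': ['blue'], 'size': ['s'], 'sort': ['price']}
```

Three small filters already produce 24 crawlable addresses for a single page, and a sort parameter in particular only reorders the same content.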

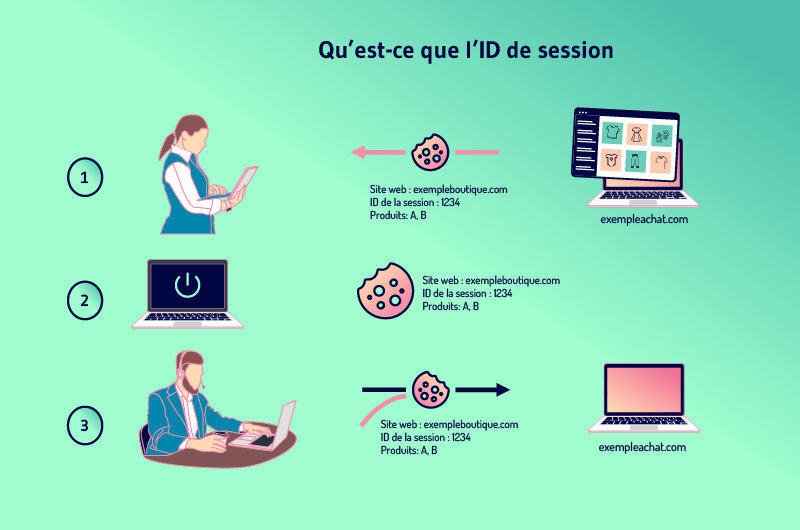

3.5. What is a session ID?

Websites often want to keep track of user activity so that they can add products to a shopping cart for example. The way to do this is to give each visitor a session ID.

The session is nothing more than the history of what a user does on a site and the ID is specific to each visitor. In order to keep the session when a visitor leaves a page for another one, websites need to store the ID somewhere.

To do this, sites usually use cookies that store the ID on the visitor’s computer.

But when a visitor has previously disabled cookie storage, the session ID can be transferred from the server to the browser as a parameter attached to the page URL.

In that case, every internal link on the site gets the session ID appended to its URL. New URLs are thus created as the visitor browses multiple pages. These new URLs are duplicates of the main URLs and create the DUST problem.
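The sketch below shows both the effect and the usual remedy: the same page gets a new URL for every visitor’s session ID, and dropping that parameter maps them all back to the main URL while keeping any other parameters. The parameter name `sessionid` and the sample URLs are hypothetical, since each platform uses its own name.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

SESSION_PARAM = "sessionid"  # hypothetical parameter name


def strip_session_id(url: str) -> str:
    """Remove the session ID parameter, keeping any other parameters."""
    parts = urlsplit(url)
    kept = [(key, value)
            for key, value in parse_qsl(parts.query, keep_blank_values=True)
            if key.lower() != SESSION_PARAM]
    return urlunsplit(parts._replace(query=urlencode(kept)))


# Three visitors browsing the same product page get three different URLs.
visits = [
    "https://shoponline.com/blue-shirt?sessionid=a81f",
    "https://shoponline.com/blue-shirt?sessionid=c03d",
    "https://shoponline.com/blue-shirt?sessionid=77e2",
]
print(len(set(visits)))                       # 3
print({strip_session_id(u) for u in visits})  # {'https://shoponline.com/blue-shirt'}
print(strip_session_id("https://shoponline.com/shirts?sessionid=9b&color=blue"))
# https://shoponline.com/shirts?color=blue
```

Stripping the ID only removes the duplicates from links you generate or audit; the session itself still needs another carrier, such as a cookie, to survive from page to page.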

3.6. Does Google penalize duplicate URLs?

Google does not penalize duplicate content or duplicate URLs, and Google employees regularly point this out. John Mueller, for example, stated:

“We don’t have a penalty for duplicate content. We don’t demote a site for having a lot of duplicate content.”

However, Google does act against duplication intended to manipulate its rankings, and in those cases it adjusts the rankings of the sites involved.

So although DUST URLs do not trigger a penalty, they can still harm a website’s SEO, as explained above.

Conclusion

All in all, DUST is a common problem that most websites encounter. It is a set of URLs that gives access to the same page on a domain.

Most of the time, DUST doesn’t pose a big problem to the SEO of a site. However, technical errors that lead to the duplication of thousands of page URLs drain a site’s crawl budget and can impact its SEO efforts.

Fortunately, these problems can be avoided and URL duplication can be corrected by:

- 301 redirection;

- The implementation of meta robots;

- The rel=canonical tag;

- The definition of passive URLs in the Google Search Console settings.

The correction of DUST URLs can take quite some time and careful planning. Therefore, it is advisable for the webmaster to avoid duplicate URL situations as much as possible.

And now, tell us which of the ways mentioned in this article have already helped you to fix duplicate URLs.